Illustration

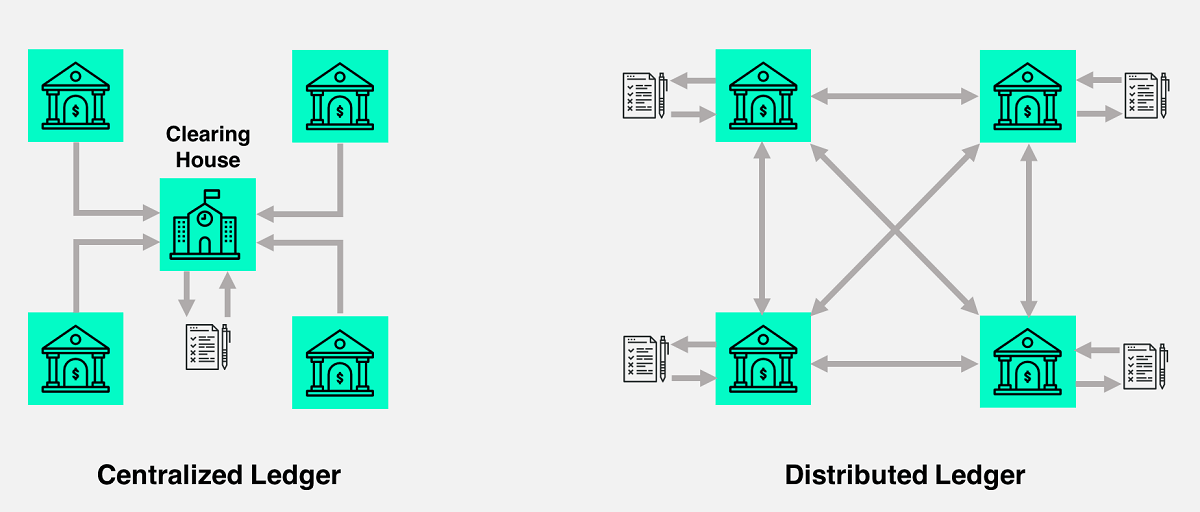

1: Elimination of Intermediary: Move from Centralized Ledger to

Distributed Ledger

Illustration

1: Elimination of Intermediary: Move from Centralized Ledger to

Distributed Ledger

Company / Organization:

Scope:

Version:

File-name:

Author: blockchain--s0-v1@becke.ch

Copyright © 2018 becke.ch – All rights reserved

Document Version History

| Version | Date | Author | Description |
|---|---|---|---|
| 1.0.0 | 21.05.2018 | Raoul Becke | Modified this document according to requirement below 1.0.0. |
| 1.1.0 | 14.09.2018 | Raoul Becke | Modified this document according to requirement below 1.1.0. |

Module / Artifact / Component / Work-Product Version History

| Version | Date | Author | Requirements | Components Changed |
|---|---|---|---|---|
| 1.0.0 | 21.05.2018 | Raoul Becke | Create documentation on: Blockchain, Ethereum Overview (Networks, Nodes, Cryptocurrency Wallet, Smart Contracts, DApps, Ether, Ethereum Virtual Machine), Nodes (Parity, Infura), Cryptocurrency Wallet (MetaMask), Testnet (Ropsten), Solidity Programming Language (Development, Compilation), web3.js (Installation, Integration, Instantiation, Sign Transaction, Callbacks, API), Deployment (web3), Truffle, Tools | This document |
| 1.1.0 | 14.09.2018 | Raoul Becke | Restructured the entire document, new “Introduction and Overview” chapter, new “Architecture Overview” chapter, new chapter on “Fork”, reworked chapter “Parity” regarding Docker and Proof of Authority, extended chapter "Solidity – Smart Contract Programming Language" regarding samples and language specification, reworked chapter "web3.js - Ethereum JavaScript API" regarding packages and security, moved chapter “Truffle” to the appendix, moved chapter “Azure Blockchain Workbench” to the appendix, moved all “Error & Solution” chapters to the appendix | This document |

Table of Contents

1. Introduction and Overview 8

1.1.2. Communication Protocol 10

1.1.2.1. Light Ethereum Subprotocol (LES) 10

2.2.1. HD (Hierarchical Deterministic) wallet & mnemonics 16

3.2. The Ethereum Virtual Machine 18

3.2.5. Storage, Memory and the Stack 19

3.2.8. Delegatecall / Callcode and Libraries 19

3.3.5. Networks & Chains (Configuration) 25

3.3.6. Proof of Authority (Consensus Algorithm Configuration) 27

3.3.6.1. Setting up a Proof of Authority network 27

3.3.6.2.1. Non-reporting contract: safeContract 31

3.3.6.2.2. Reporting Contract: contract 33

3.4.1. Installation and configuration 36

3.4.1.1. Import existing DEN (from Ganache) 39

3.5. Ropsten (Test Network) 40

3.6. Solidity – Smart Contract Programming Language 42

3.6.1.1. Structure of a Contract 44

3.6.1.3. Units and Globally Available Variables 46

3.6.1.3.3. Special Variables and Functions 46

Block and Transaction Properties 46

3.6.1.4. Expressions and Control Structures 46

3.6.1.5.1. Visibility and Getters 47

3.7. web3.js - Ethereum JavaScript API 49

3.7.1. Installation & Integration 49

3.7.2. Instantiation – setting a provider 50

3.7.2.1. Proprietary (HD Wallet) Provider 51

3.7.3.1. web3.eth.accounts.create 51

3.7.4.1. web3.eth.accounts.wallet 52

3.7.4.2. web3.eth.accounts.signTransaction 53

3.7.4.3. web3.eth.personal.unlockAccount 54

3.7.4.4. Proprietary & Tool Specific 54

4.1.2. Solidity Development 62

4.2.3. Solidity Development 66

6.2. Glossary (terms, abbreviations, acronyms) 71

A. Appendix - Truffle (Ethereum Development Framework) 72

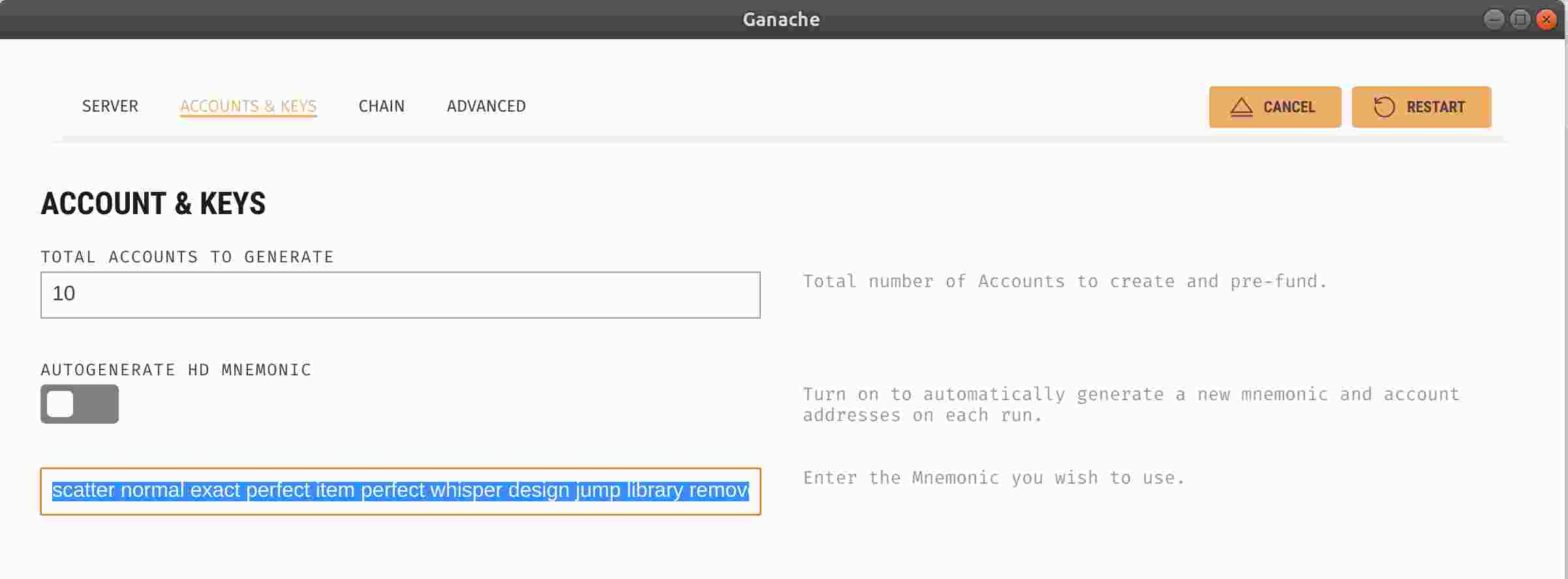

A.2. Development (Ethereum Pet Shop) 72

A.2.2. Create directory structure: 72

A.2.3. Download and install: 73

A.2.4. Writing the smart contract 73

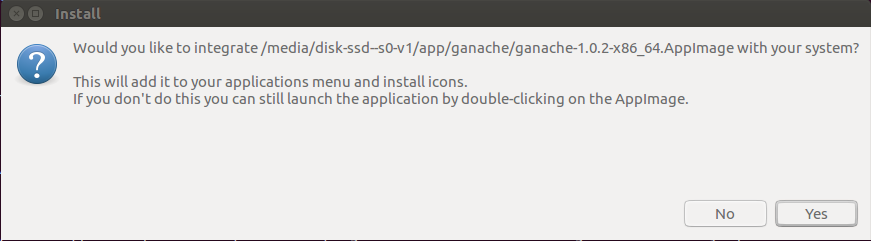

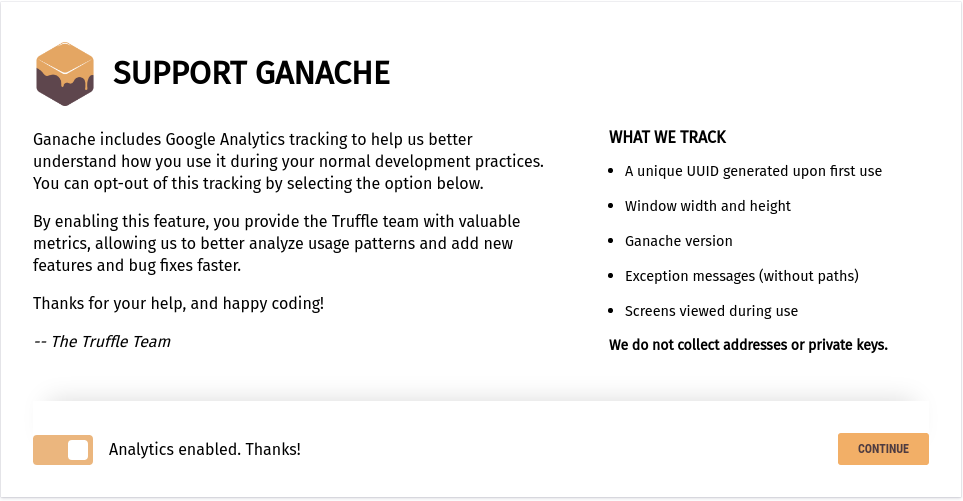

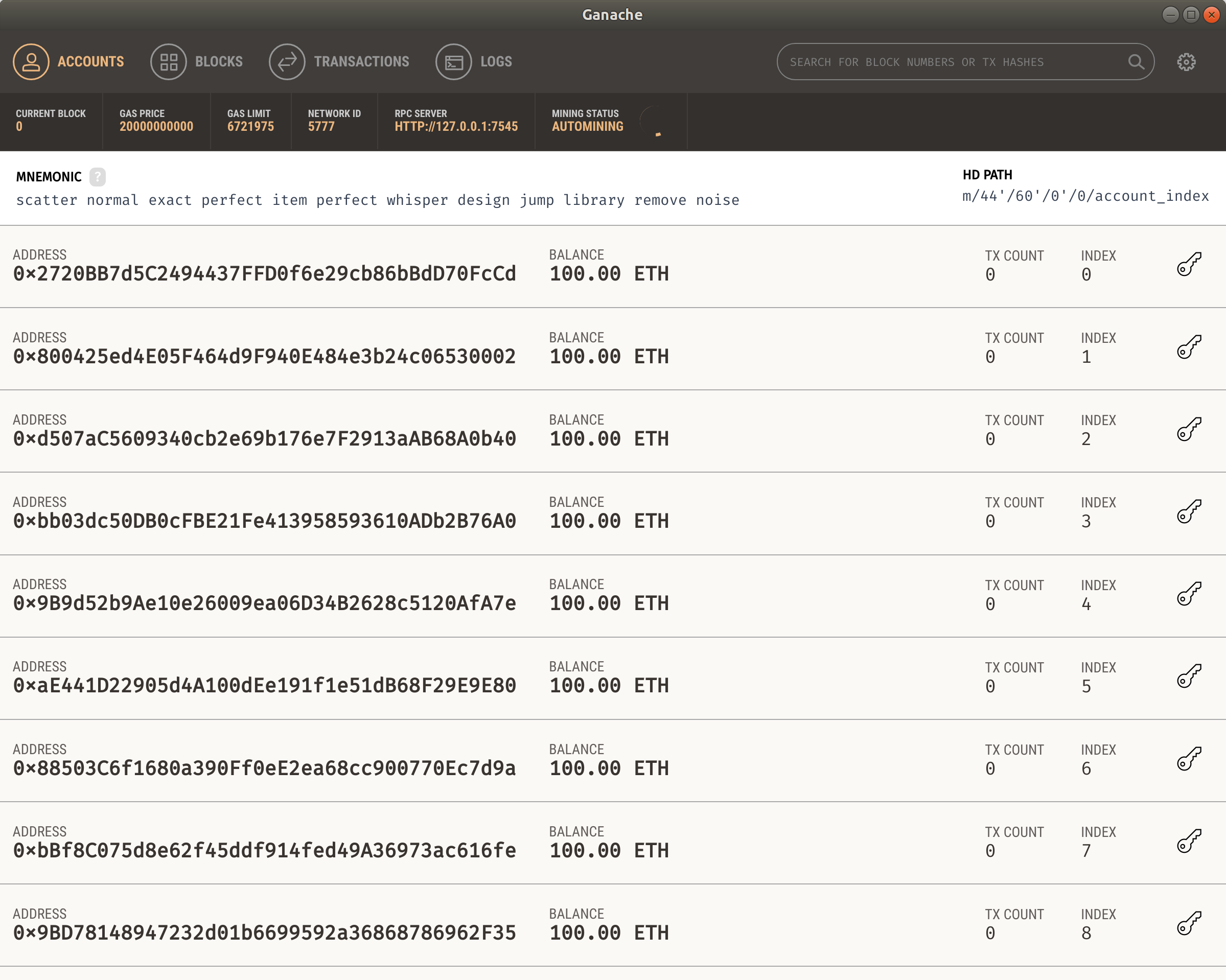

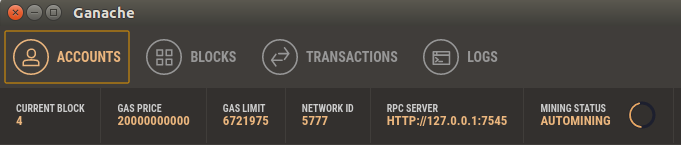

A.2.6. Migration (Deployment & Versioning) & Installation of Ganache (Ethereum Node) 75

A.2.7. Testing the smart contract 81

A.2.9. Writing Tests in JavaScript 82

A.2.10. Interacting with your contracts 83

A.2.11. Package management via EthPM 86

A.2.12. Package management via NPM 86

A.2.13. Debugging your contracts 86

A.2.14. Using Truffle Develop and the console 86

A.2.15. Writing external scripts 86

A.2.16. Using the build pipeline 86

A.2.18. Networks and app deployment 87

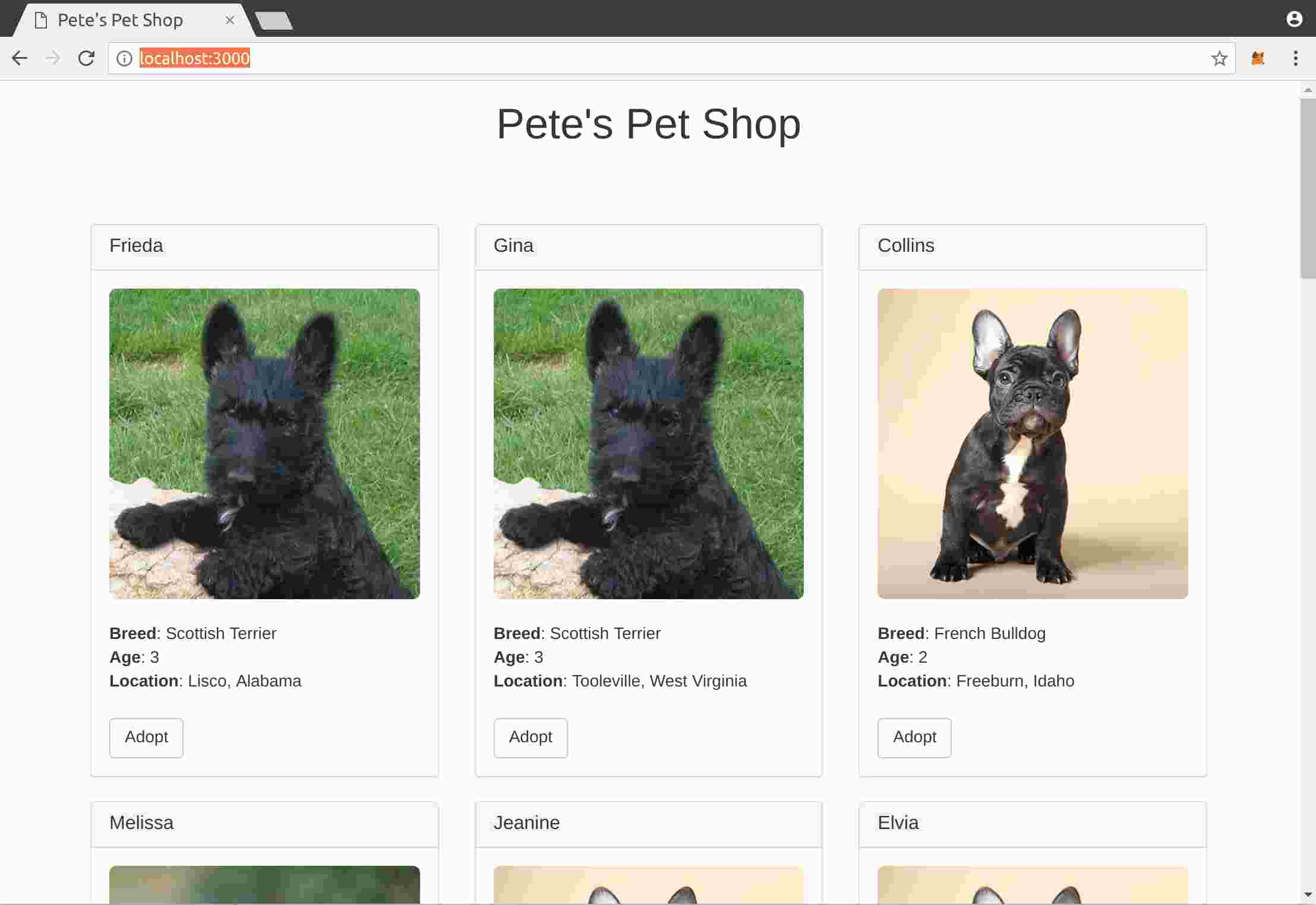

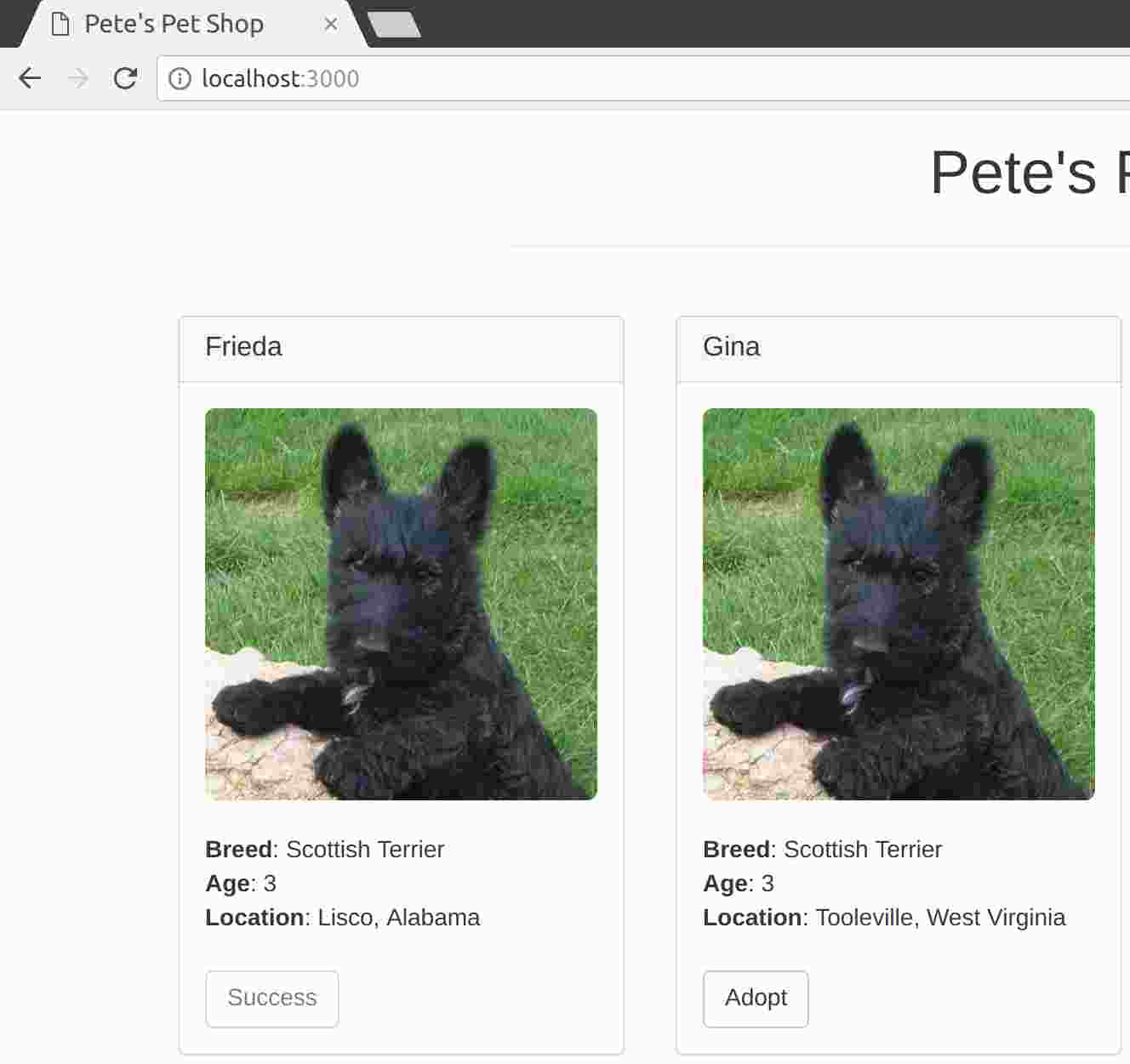

A.2.19. Creating a user interface to interact with the smart contract 87

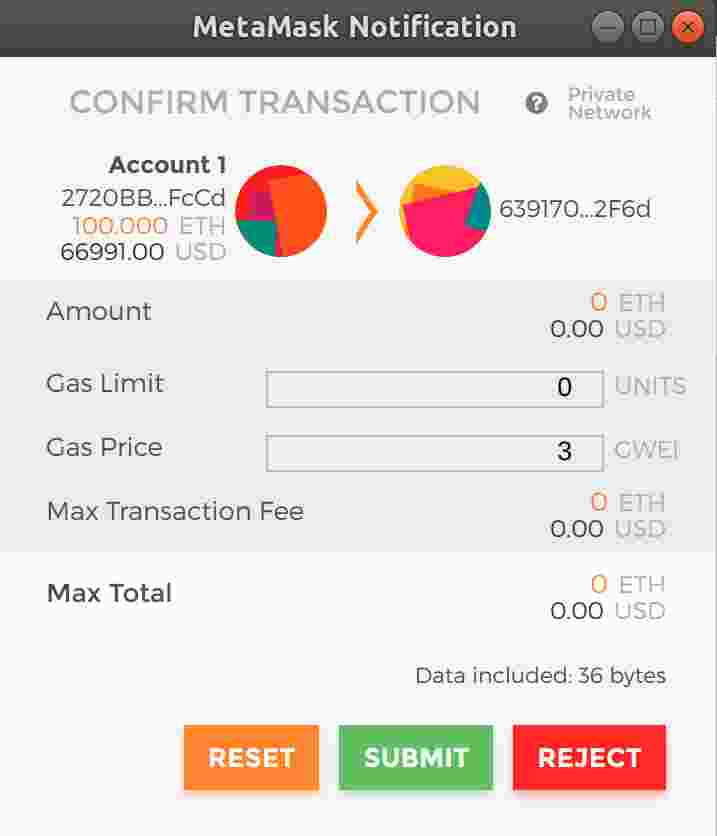

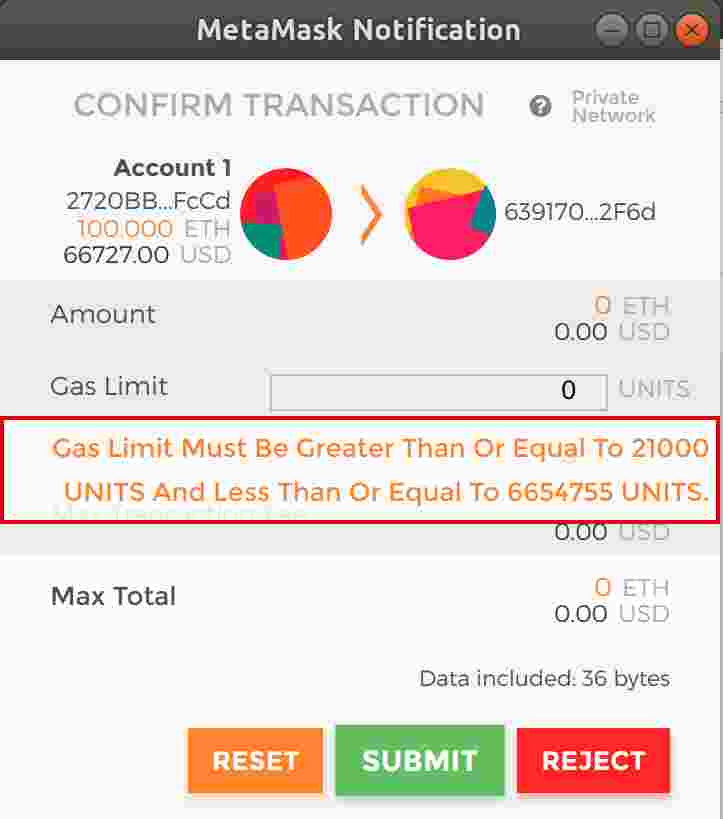

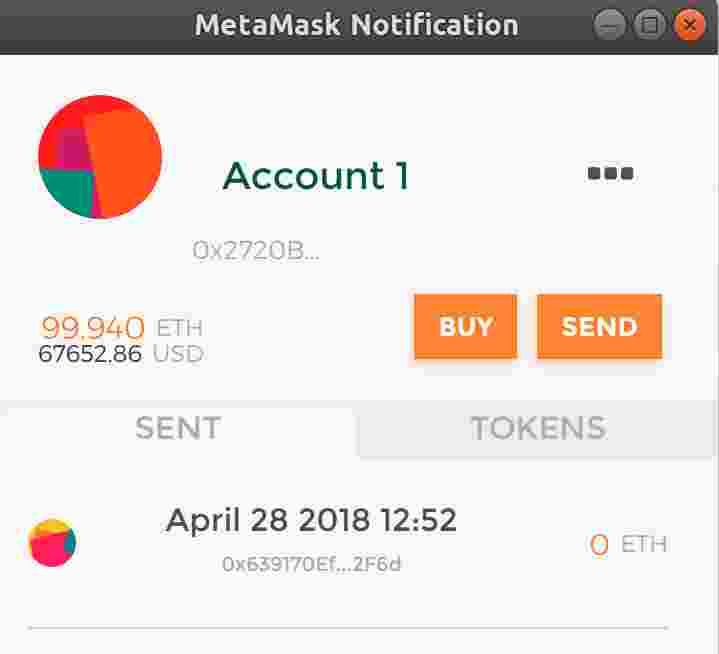

A.2.20. Interacting with the dapp in a browser 90

A.2.21. Installing and configuring lite-server 90

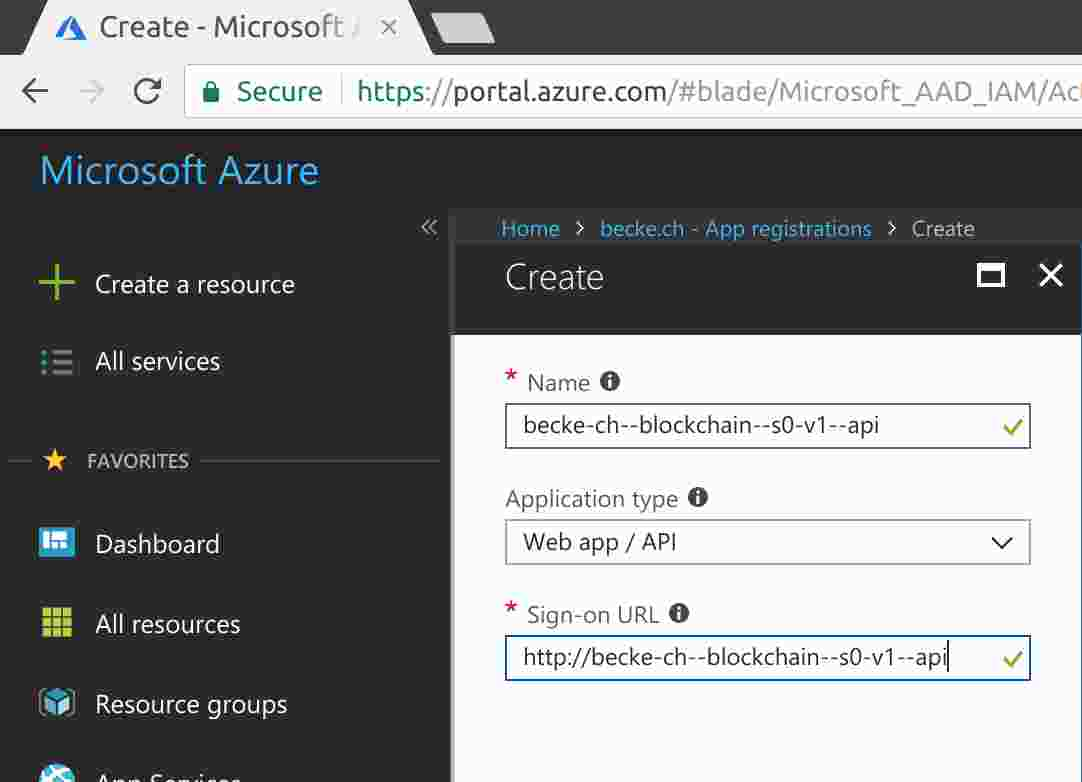

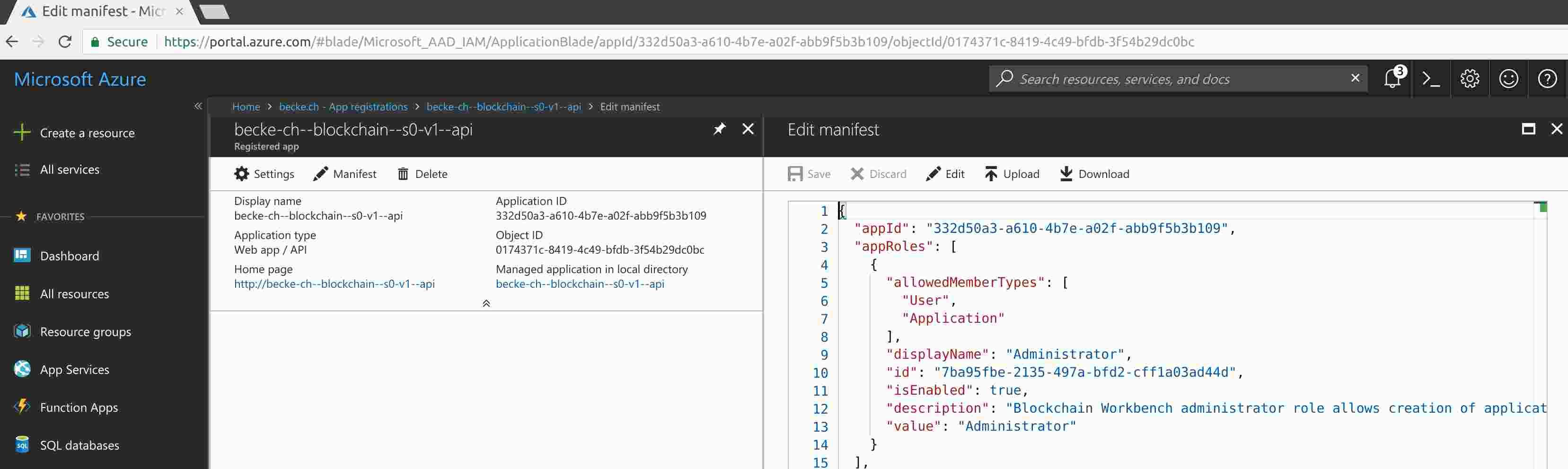

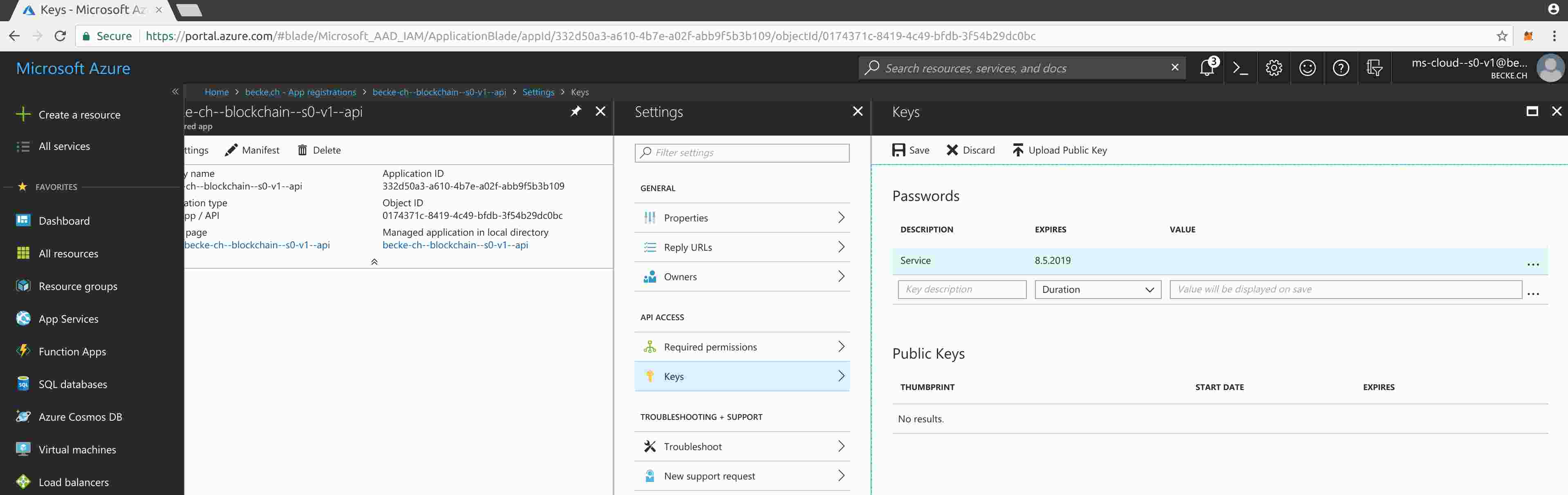

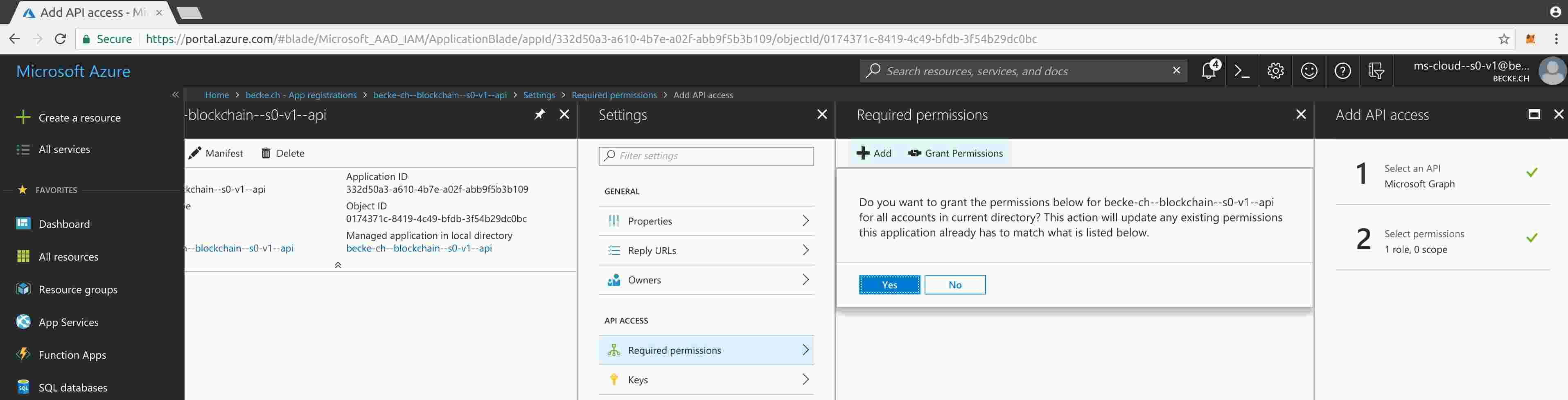

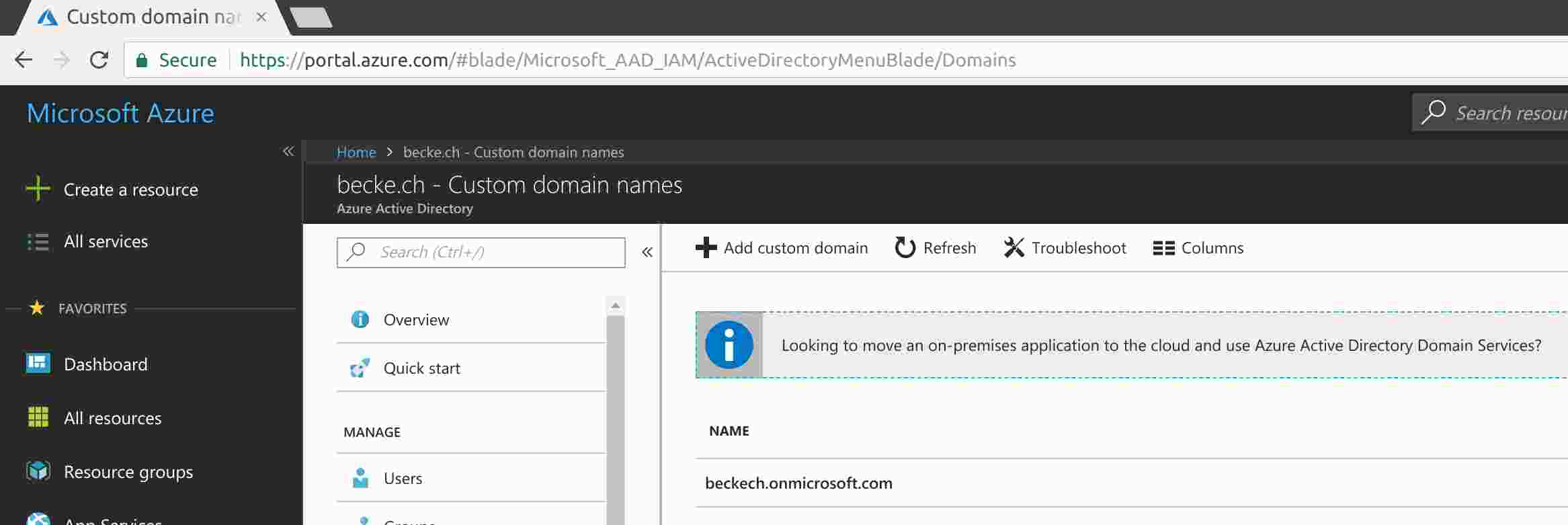

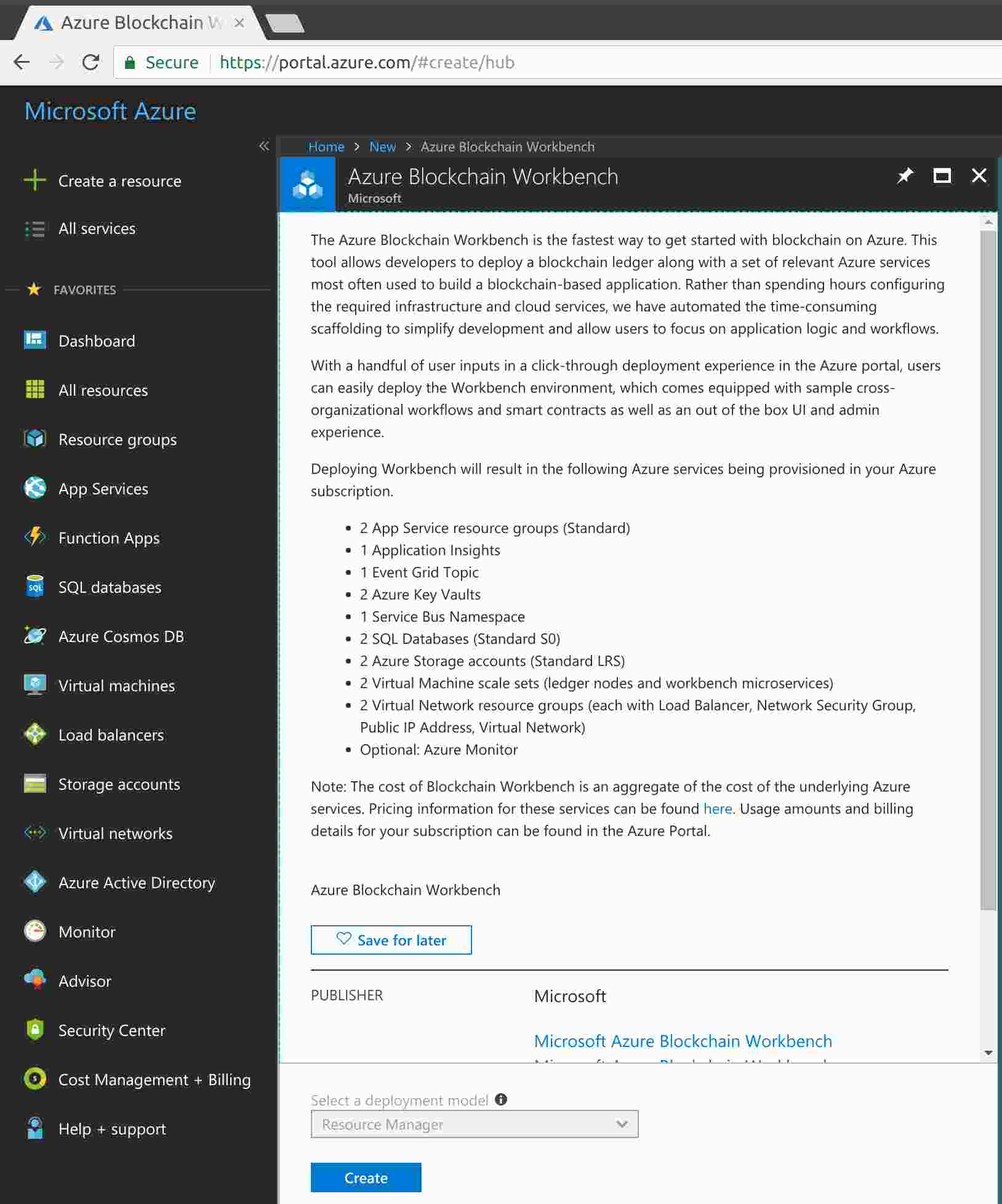

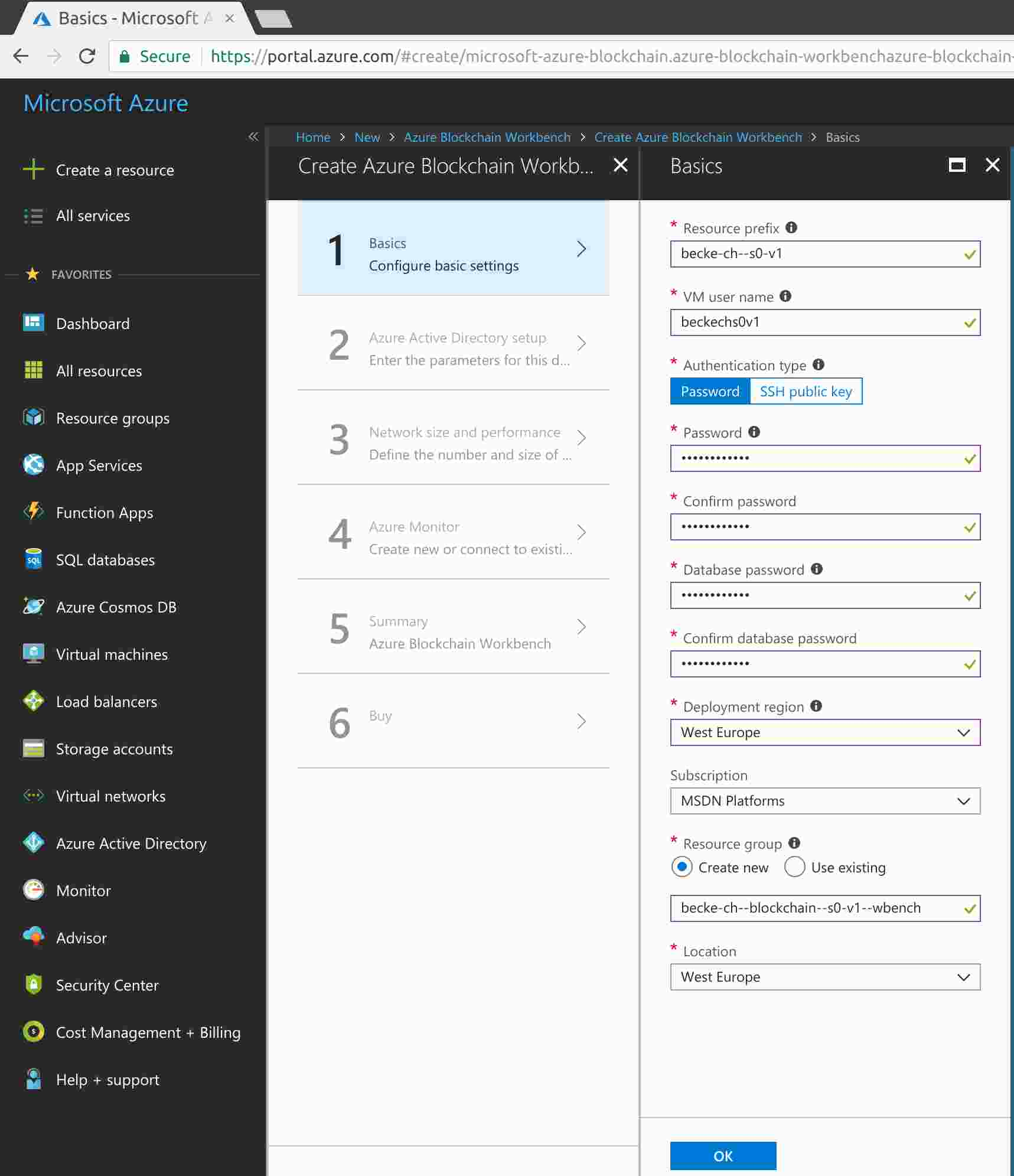

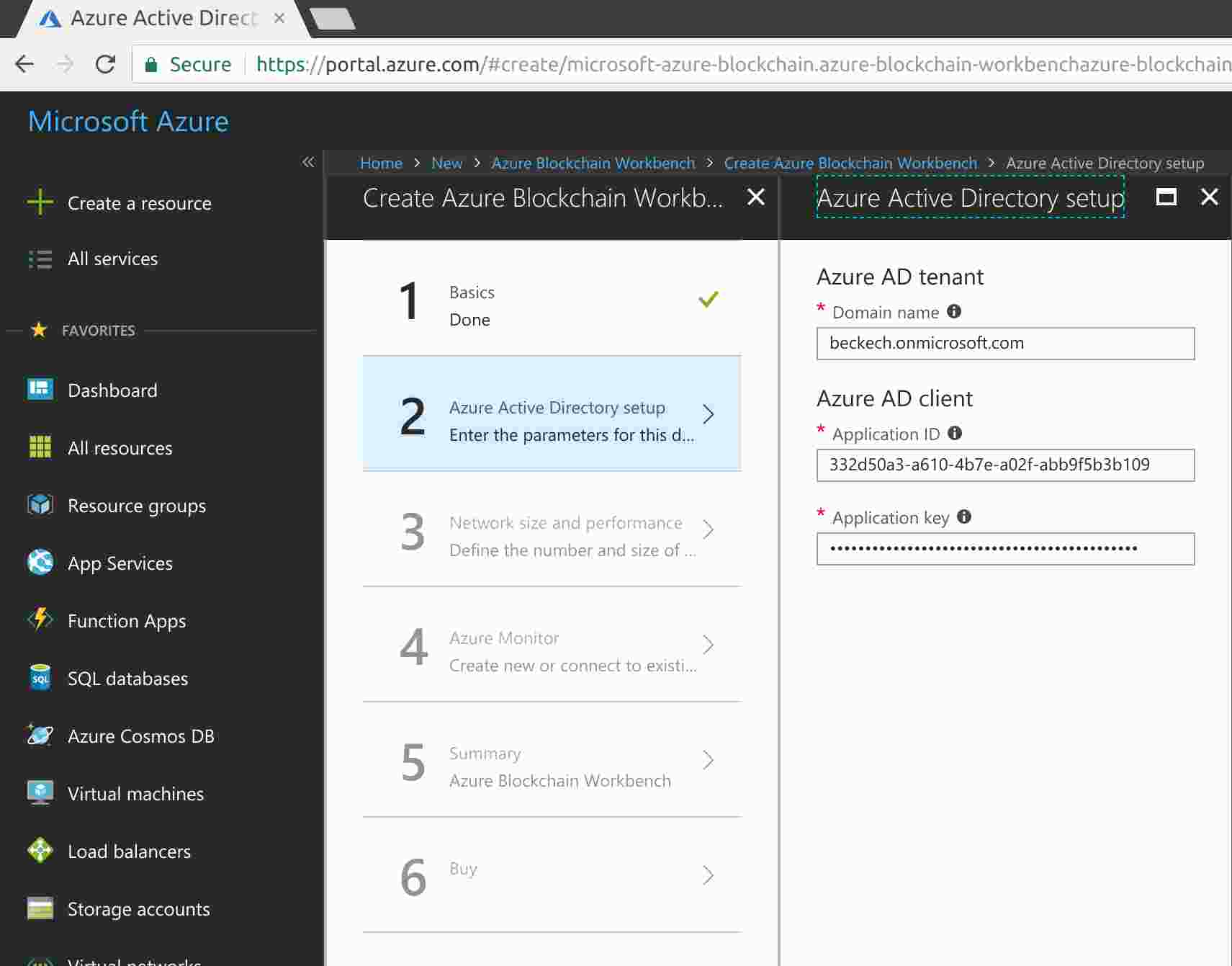

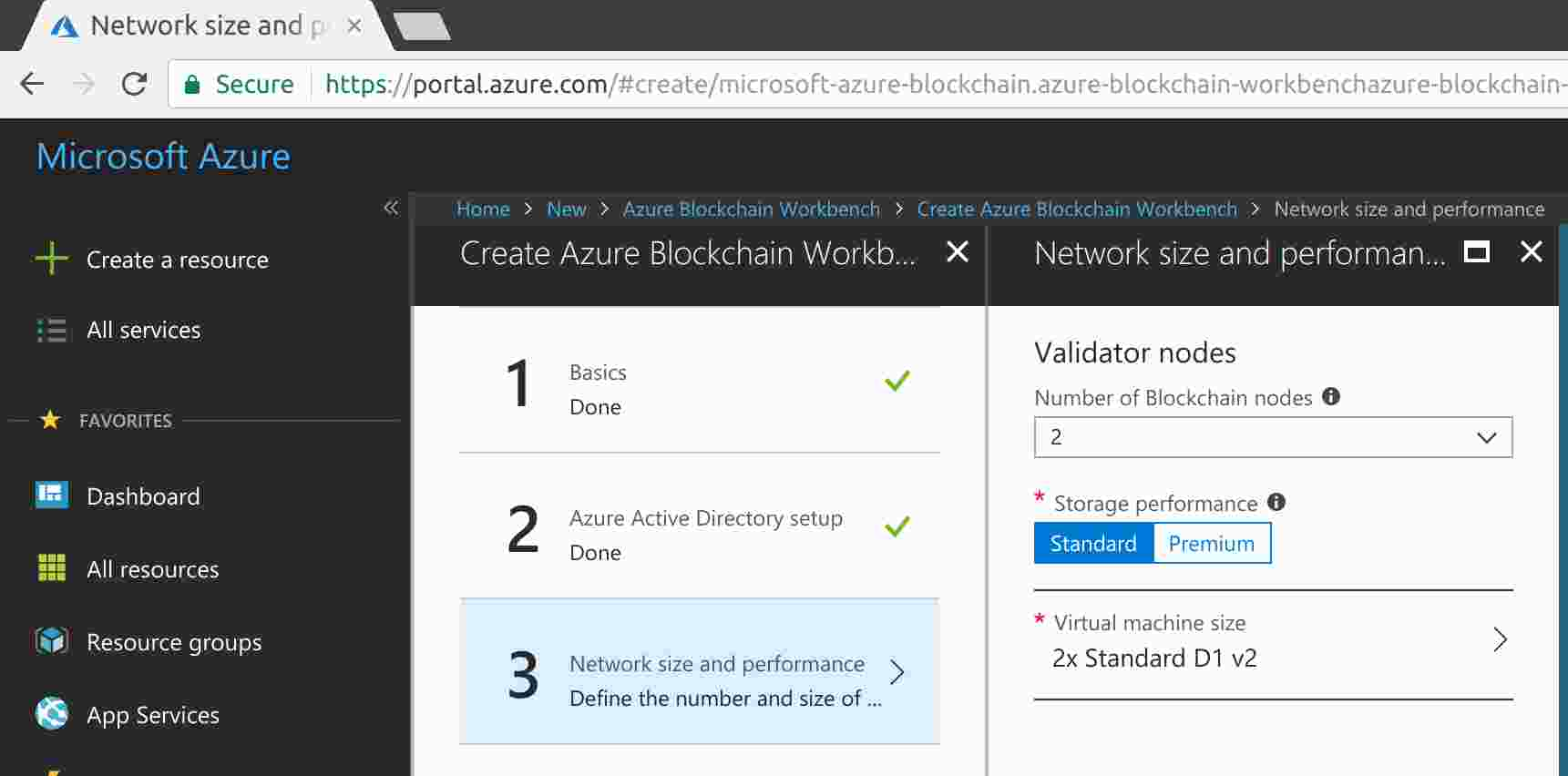

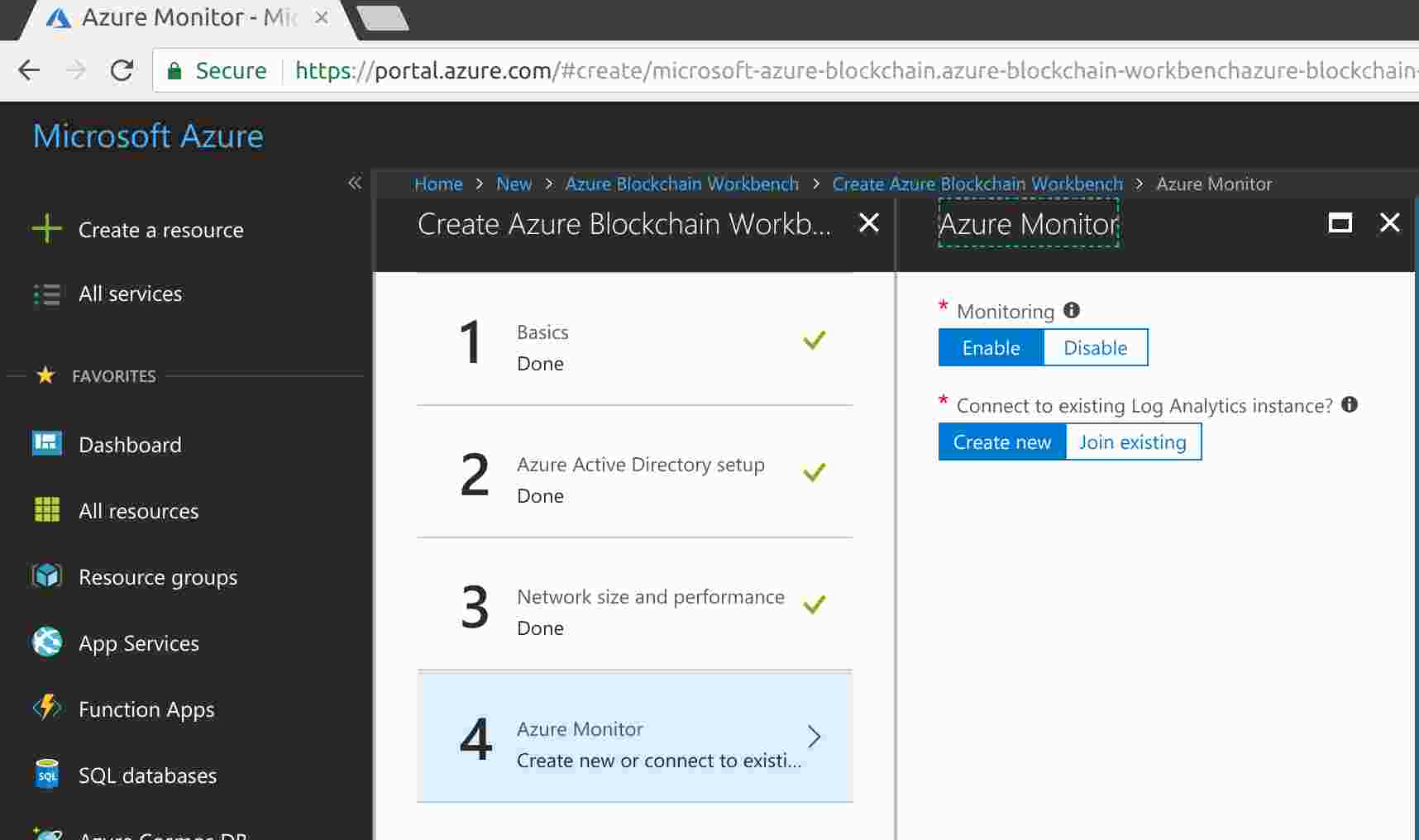

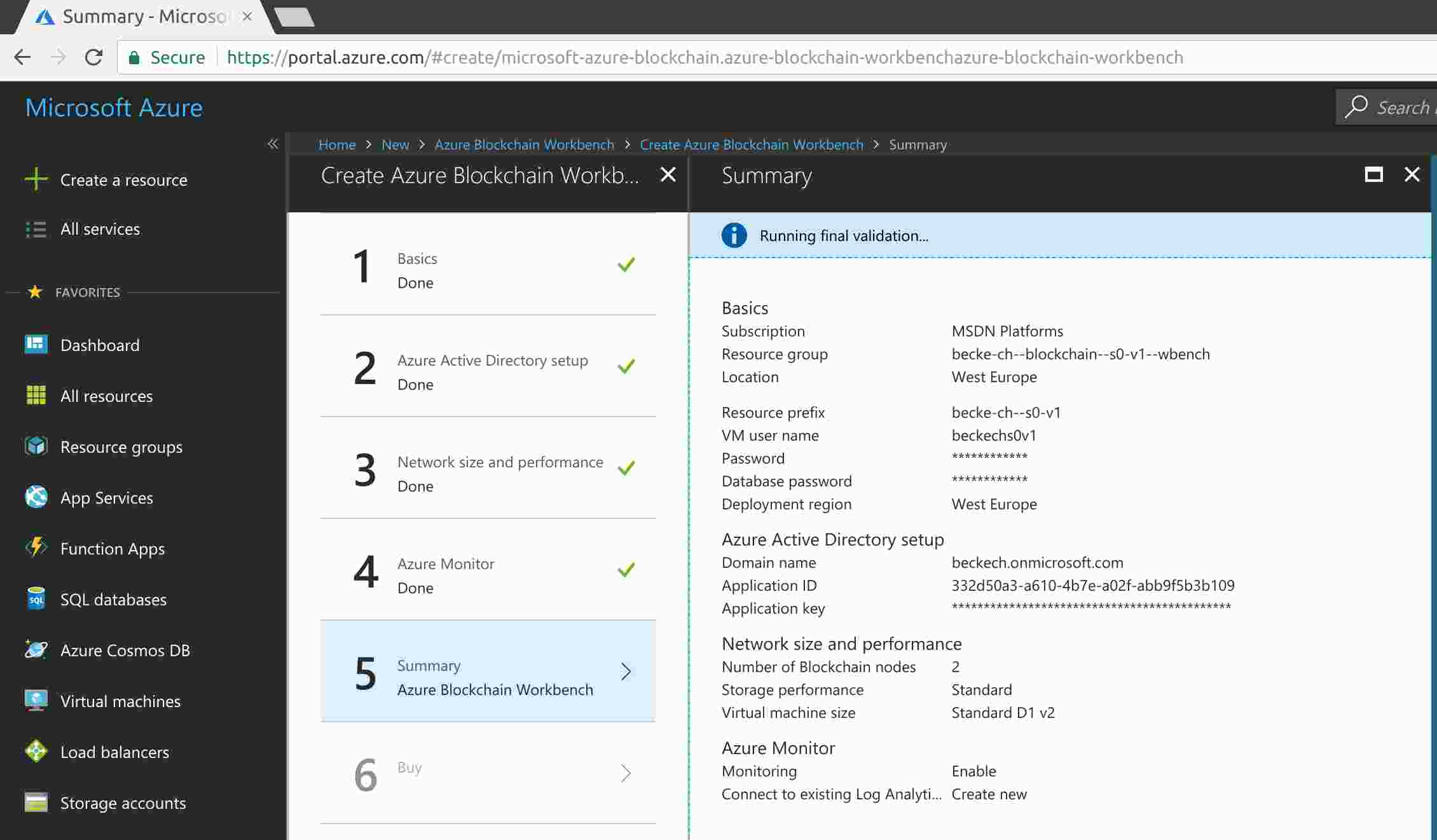

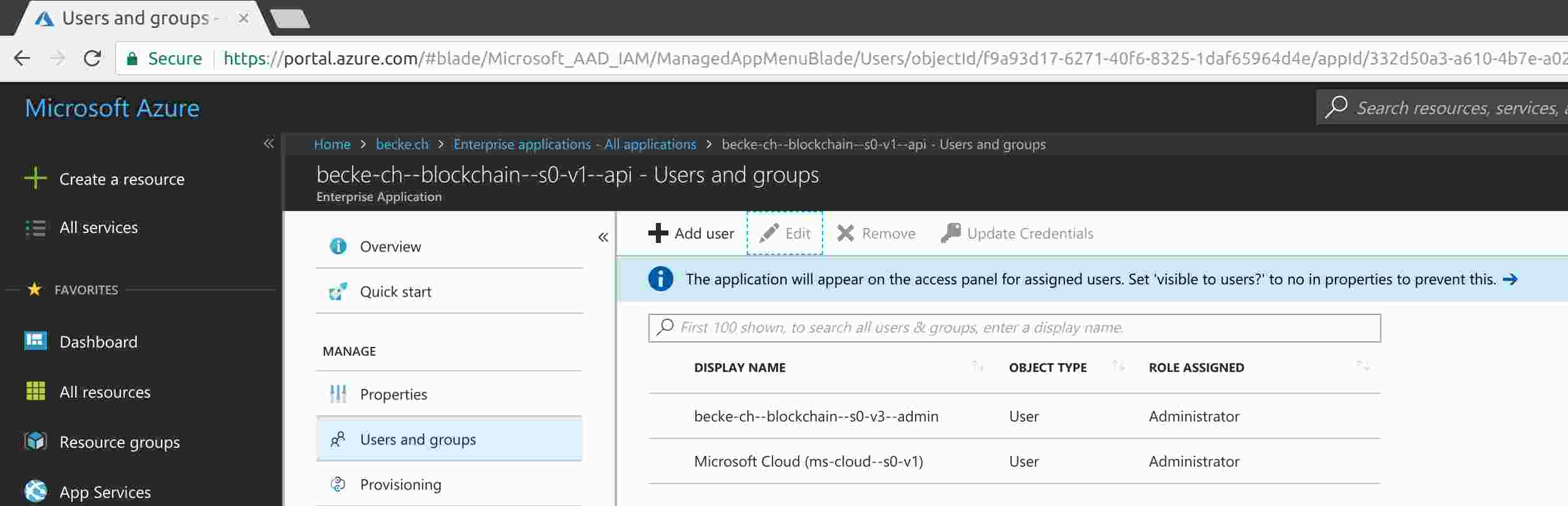

B. Appendix – Azure Blockchain Workbench 96

B.1. Create Blockchain app 102

B.1.2. Smart contract code file 103

B.1.4. Add blockchain application to Blockchain Workbench 104

Illustration Index

Illustration 1: Elimination of Intermediary: Move from Centralized Ledger to Distributed Ledger 8

Illustration 2: Chain of Blocks 8

Illustration 3: Blockchain: Ethereum: Information Flow 12

Illustration 4: Blockchain: Ethereum: Interfaces 13

Illustration 5: Soft- versus Hard-Fork 14

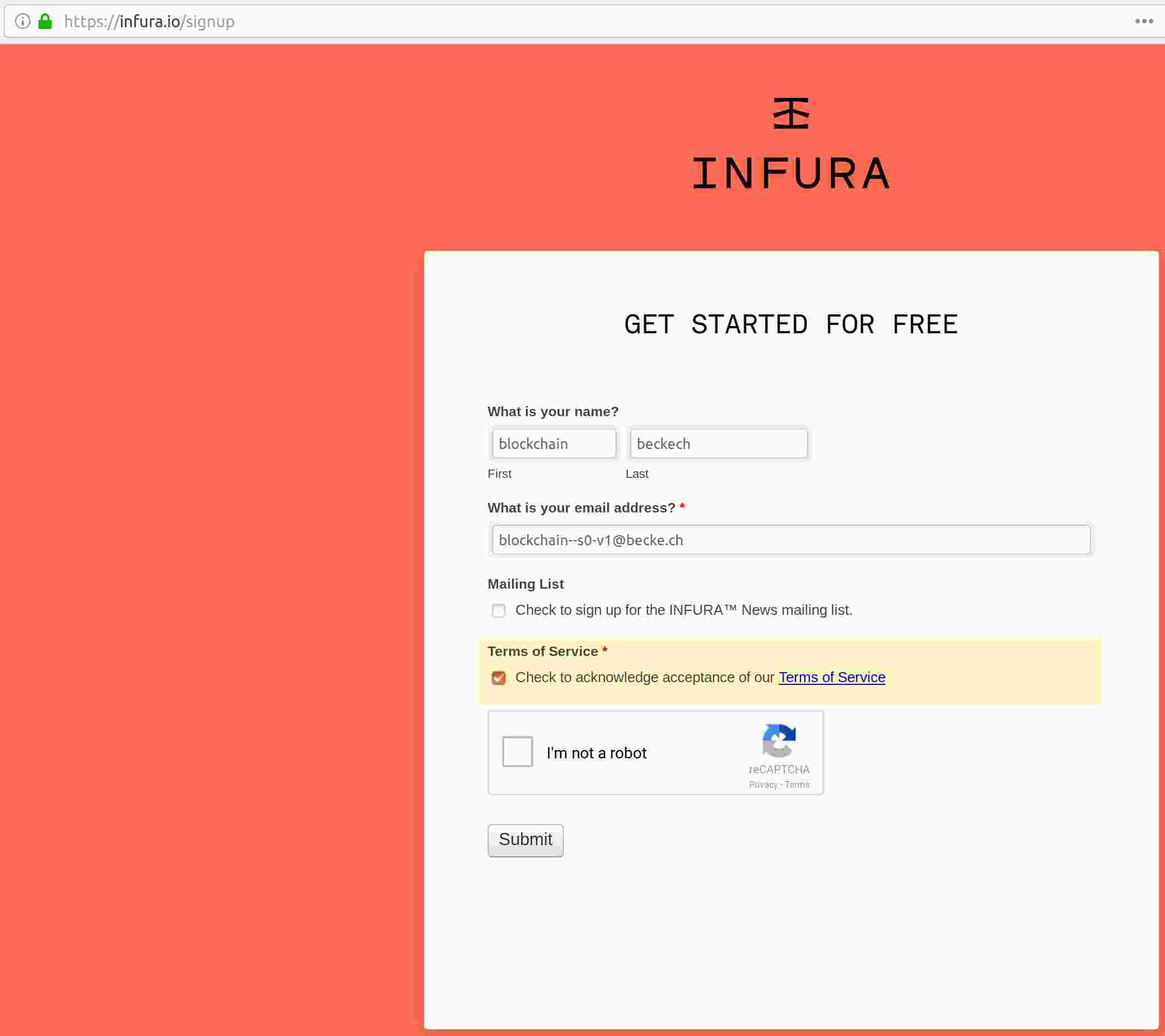

Illustration 6: Infura: Sign-Up 34

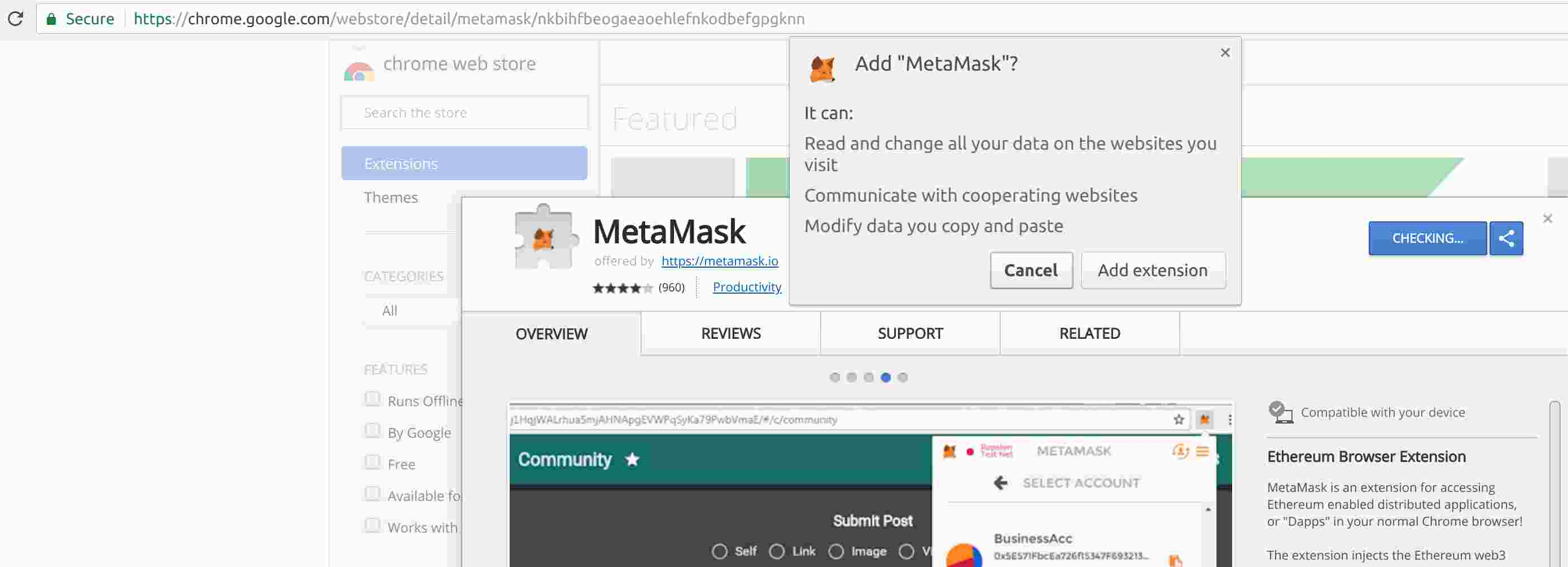

Illustration 7: MetaMask: Add Chrome Extension 36

Illustration 8: MetaMask: Browser Icon 36

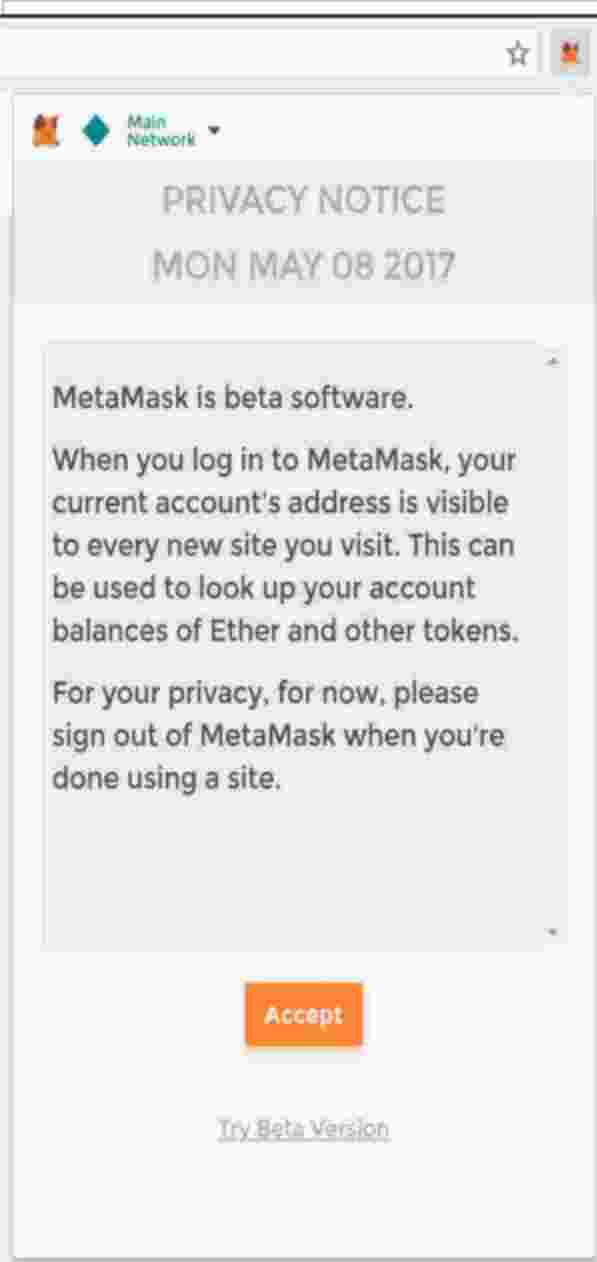

Illustration 9: MetaMask: Privacy Notice 37

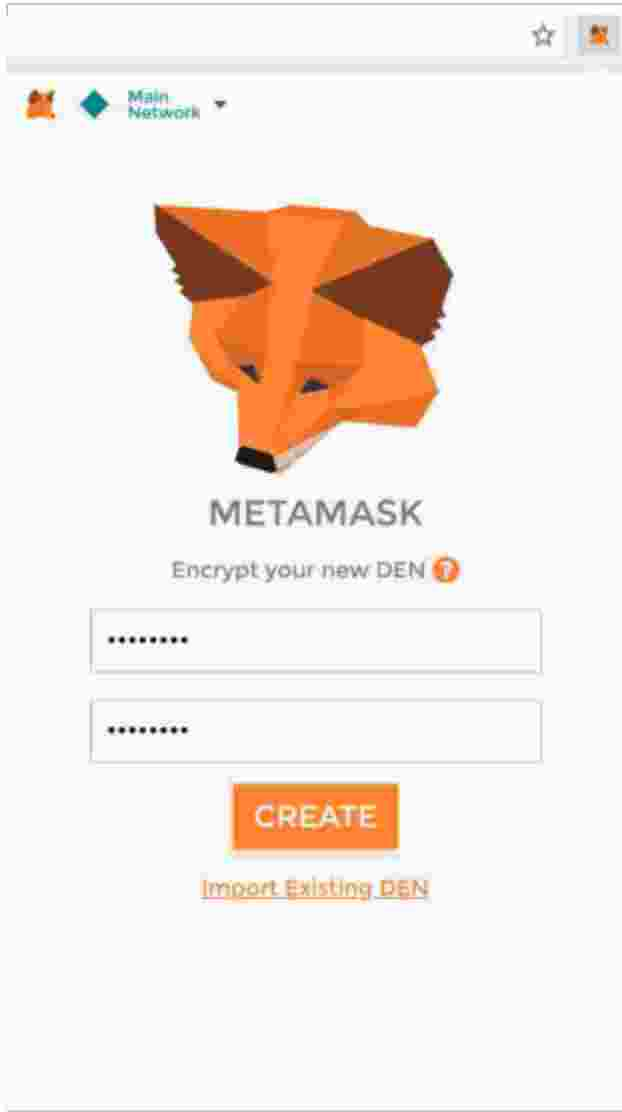

Illustration 10: MetaMask: Create DEN (HD Wallet) 38

Illustration 11: MetaMask: Mnemonic 38

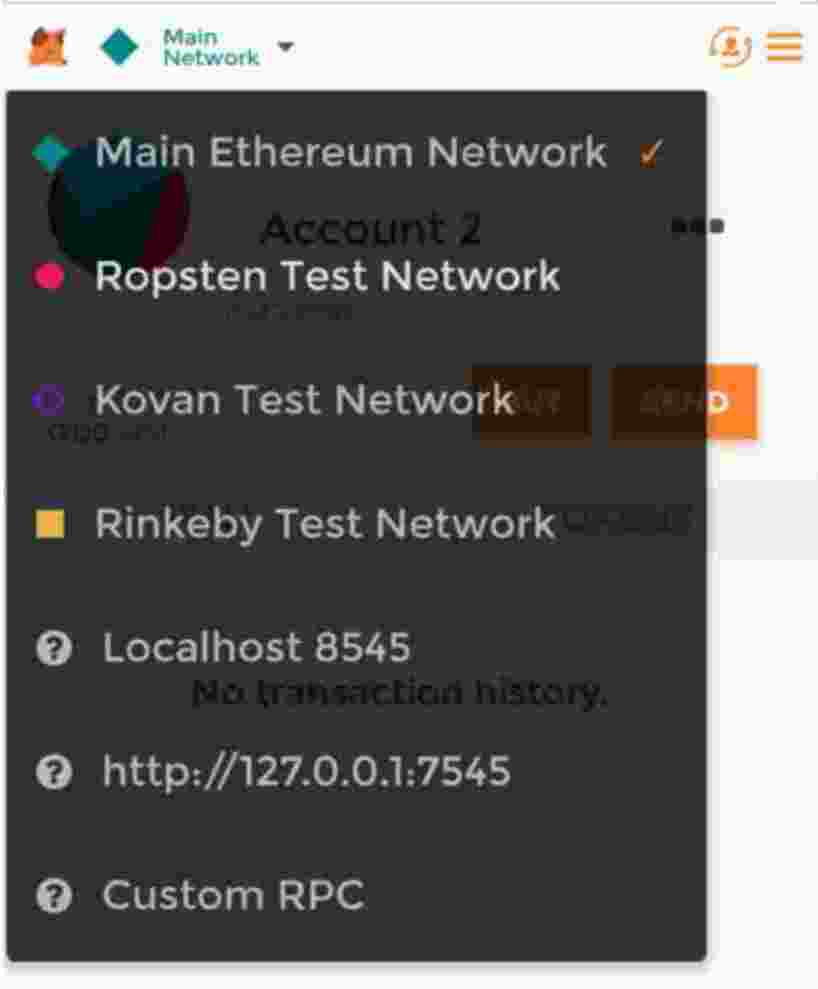

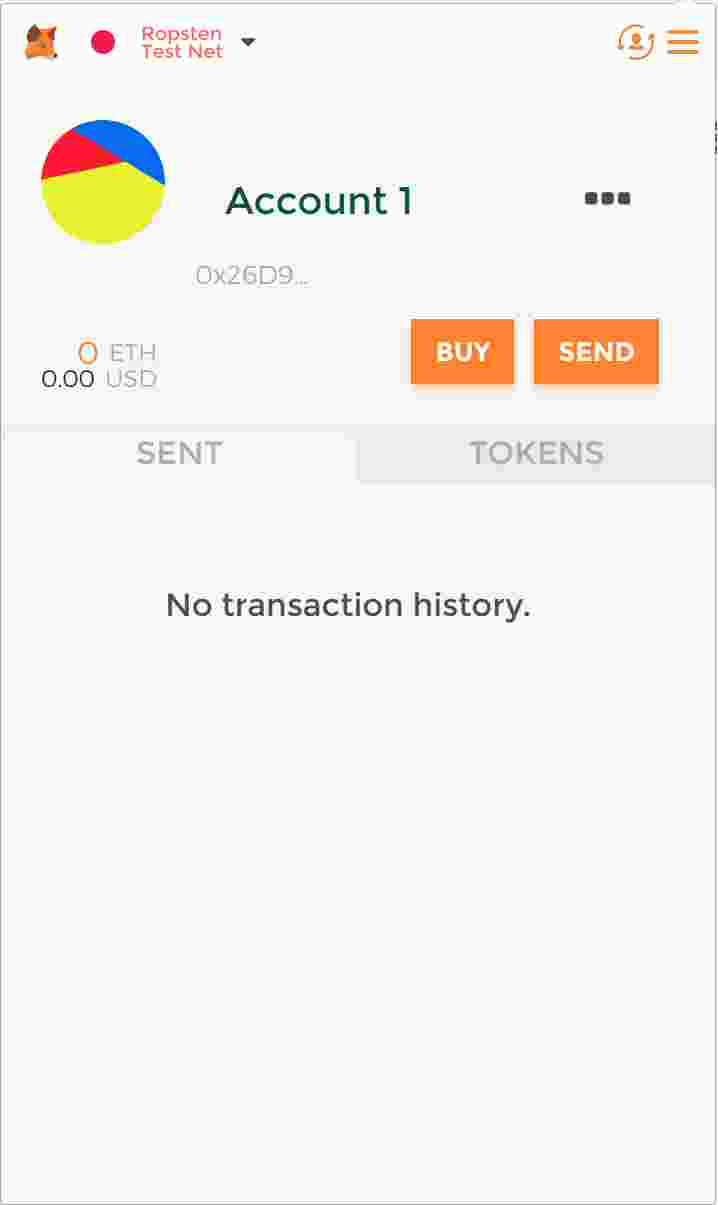

Illustration 12: Testnetwork: Ropsten: Metamask: Create Account 40

Illustration 13: Testnetwork: Ropsten: Metamask: Account 40

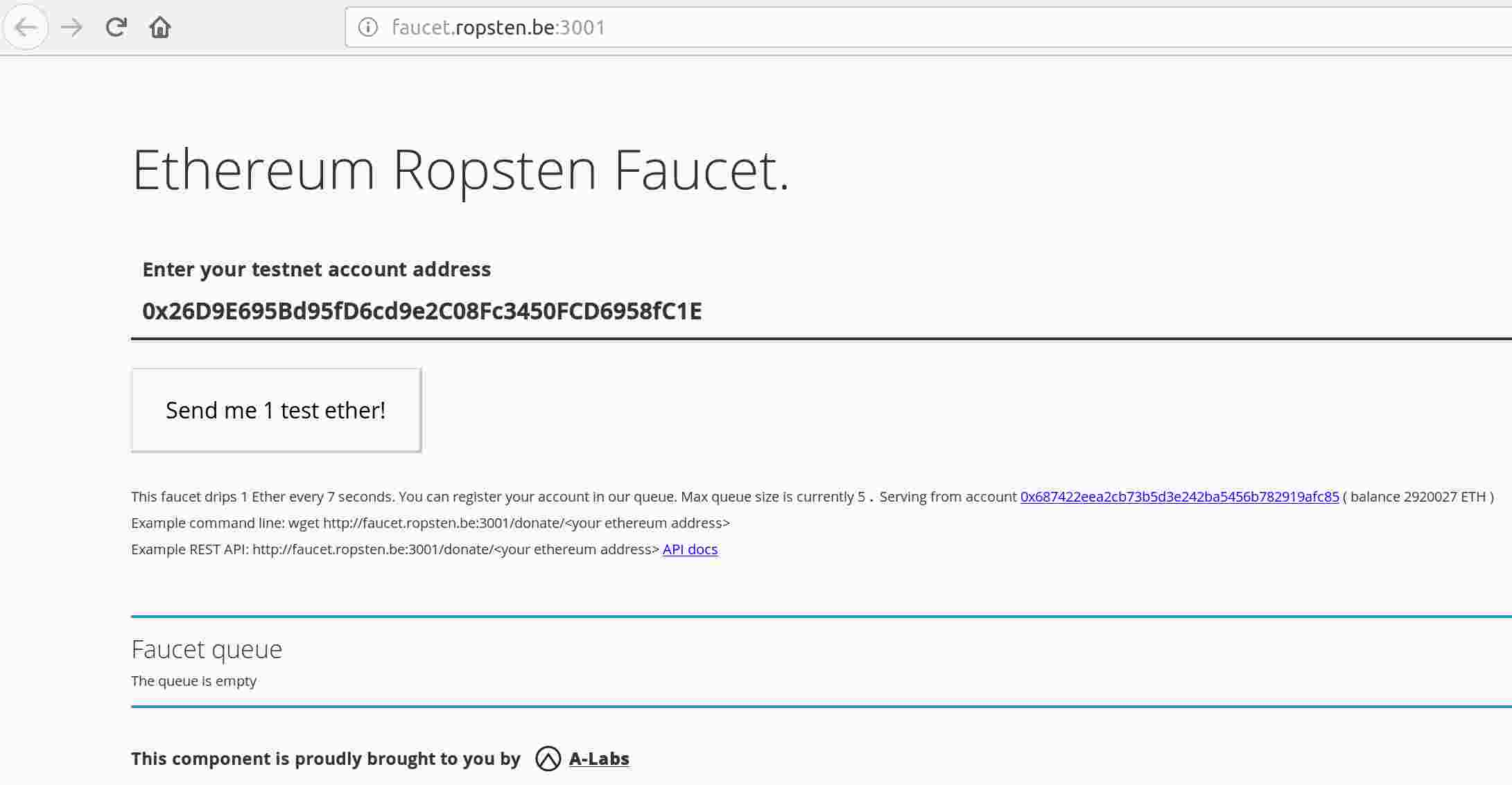

Illustration 14: Testnetwork: Ropsten: Faucet 41

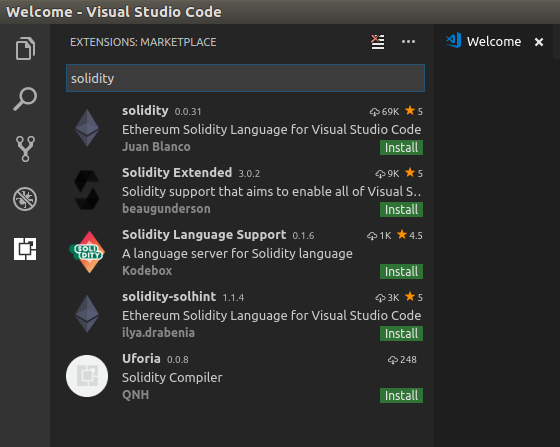

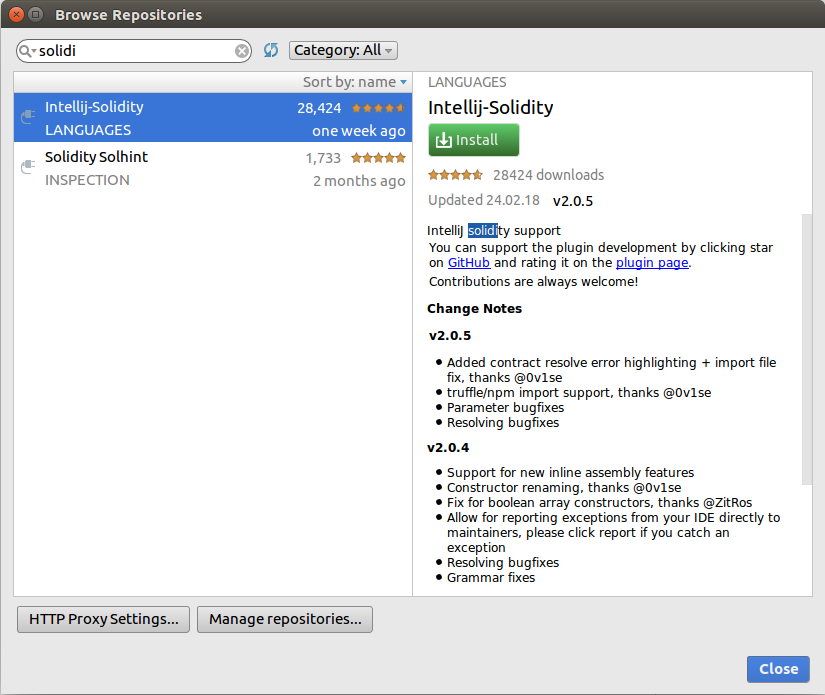

Illustration 15: Solidity Extension 62

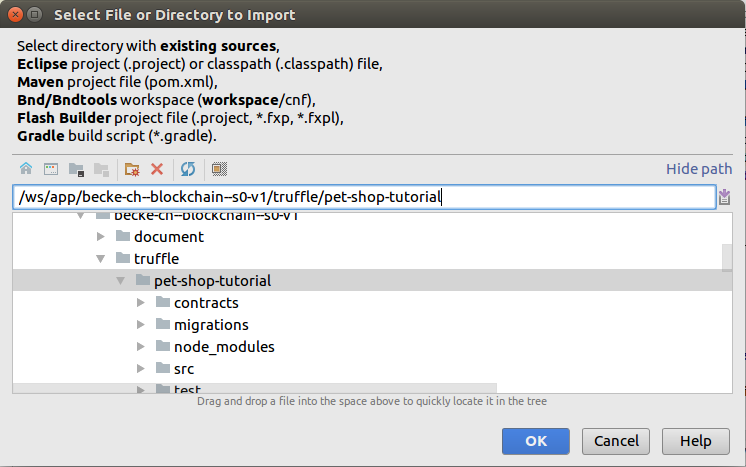

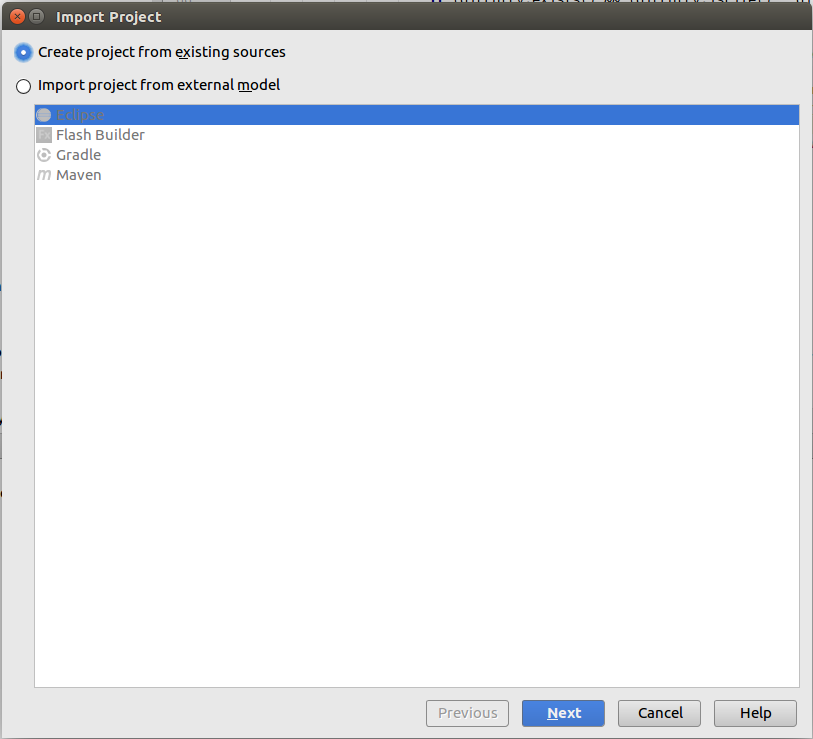

Illustration 16: IntelliJ: New project from existing sources 63

Illustration 17: IntelliJ: Create project from existing resource 64

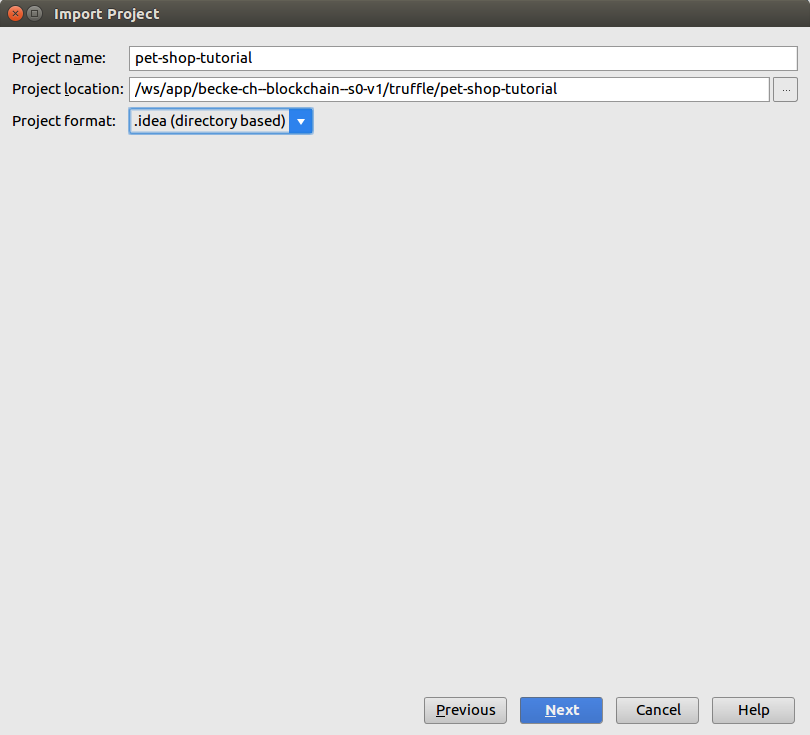

Illustration 18: IntelliJ: Project setting 64

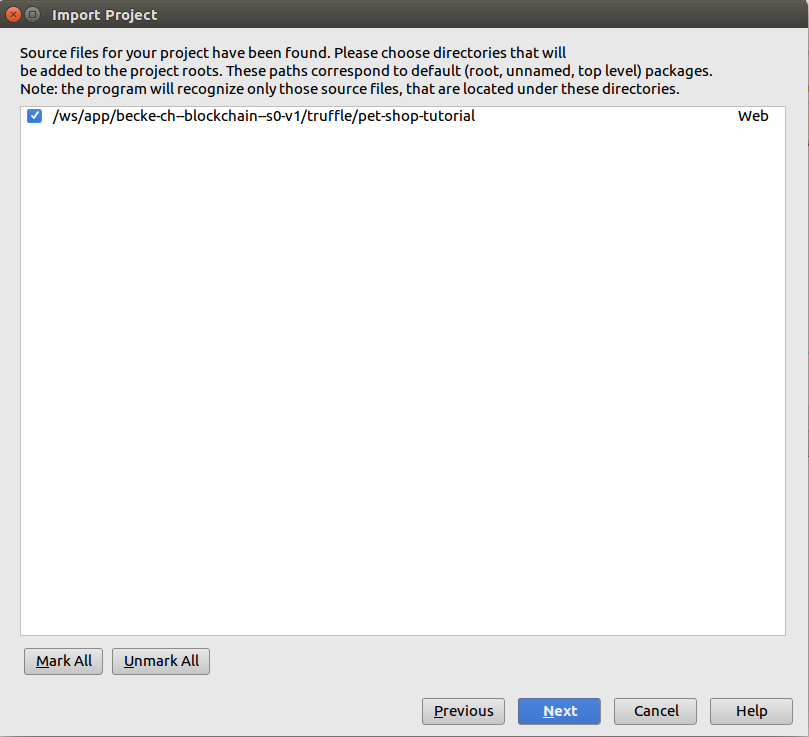

Illustration 19: IntelliJ: Import Source Files 65

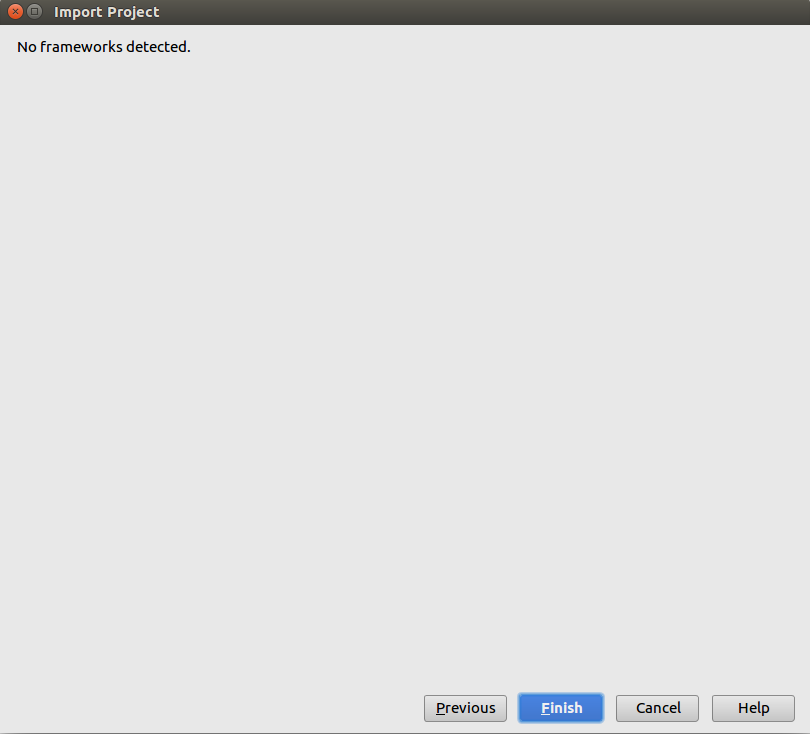

Illustration 20: IntelliJ: No Frameworks Detected 65

Illustration 21: Intellij-Solidity Plug-In 66

Index of Tables

Table 1: References

Table 2: Glossary

«Elimination» Intermediary: Centralized Ledger -> Distributed Ledger: One of the main drivers for blockchain is the “elimination” of the intermediary (e.g. a bank) by moving from a centralized ledger to a distributed ledger, where the ledger is hosted not on a central node but on all nodes participating in the network. There are several reasons to do so: no access to the intermediary, no trust in the intermediary, intermediary fees that are too high, transaction handling by the intermediary that is too slow, etc. On the downside, eliminating the intermediary also eliminates the regulatory duties and expectations it enforces, for example the know-your-customer principle in the context of anti-money-laundering legislation.

Illustration 1: Elimination of Intermediary: Move from Centralized Ledger to Distributed Ledger

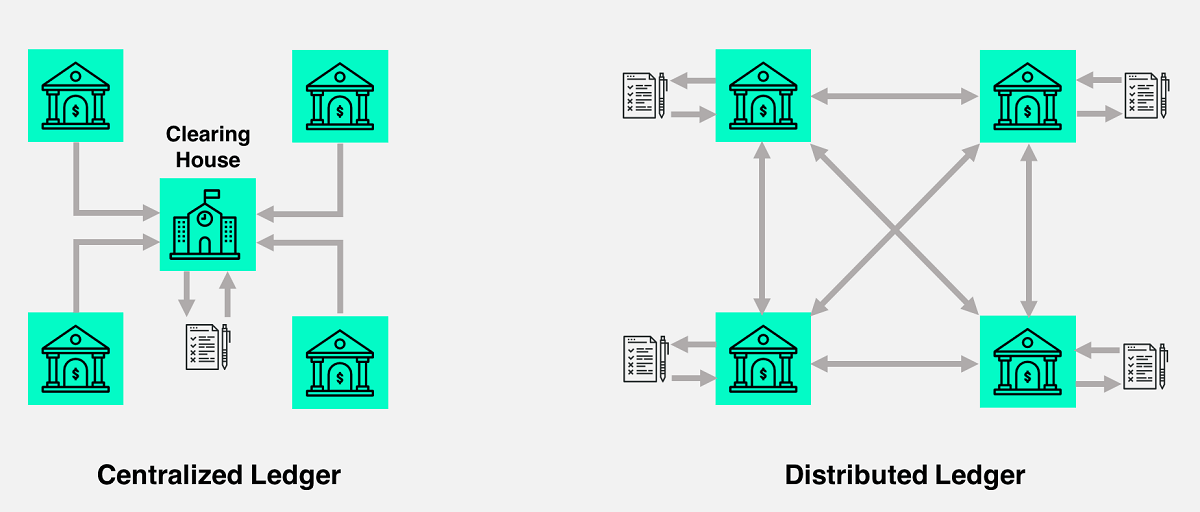

Ledger -> Blockchain: The ledger is a (time-sorted) list of transactions. Once a certain number of transactions, i.e. the current block size limit, has been reached, these transactions are put into a block¹. This block is then chained to the previous block by writing the address, i.e. the hash value, of the previous block into the current block. Finally, the transactions in the block are secured by calculating the hash value of the contained transactions and persisting this value in the current block as well. The hash values guarantee that the transactions and the previous block cannot be tampered with unnoticed, because any change invalidates the hash value. The resulting chain is secure and immutable.

Blocks are created (aka mined) and the hash values calculated by special nodes in the network called mining nodes. Because many nodes can submit new transactions to the network and because there are many mining nodes, several blocks containing different sets of transactions can be created at the same time, and the chain forks. A fork can also happen when part of the network, together with the nodes in that segment, is separated from the rest. After some time, however, the chain converges on a single branch again, namely the longest one, which most of the nodes are working on. “Longest” in this context means the branch in which the most cumulative effort in terms of block hash calculation was invested. Because of this behavior, one should wait until the block containing a transaction has reached a certain depth before shipping the goods related to this transaction, to make sure the transaction is not on a temporary branch that gets reverted.
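The chaining described above can be illustrated with a minimal sketch. It assumes SHA-256 over a JSON serialization and hypothetical field names (`transactions_hash`, `previous_hash`); real blockchains hash a binary block header and use more elaborate structures, so this only shows the principle of tamper detection.

```python
import hashlib
import json


def digest(data) -> str:
    """Return the SHA-256 hex digest of a JSON-serializable value."""
    return hashlib.sha256(json.dumps(data, sort_keys=True).encode("utf-8")).hexdigest()


def make_block(transactions: list, previous_hash: str) -> dict:
    """Build a block that stores the hash of its transactions and of its predecessor."""
    return {
        "transactions": transactions,
        "transactions_hash": digest(transactions),
        "previous_hash": previous_hash,
    }


def chain_is_valid(chain: list) -> bool:
    """Recompute every hash and check that each block still links to its predecessor."""
    for index, block in enumerate(chain):
        if digest(block["transactions"]) != block["transactions_hash"]:
            return False  # the transactions were modified
        if index > 0 and block["previous_hash"] != digest(chain[index - 1]):
            return False  # the link to the previous block is broken
    return True


genesis = make_block([{"from": "alice", "to": "bob", "amount": 5}], previous_hash="0" * 64)
second = make_block([{"from": "bob", "to": "carol", "amount": 2}], previous_hash=digest(genesis))
chain = [genesis, second]
print(chain_is_valid(chain))  # True

# Tampering with an old transaction invalidates the stored hash values.
genesis["transactions"][0]["amount"] = 500
print(chain_is_valid(chain))  # False
```

Even if an attacker also recomputes `transactions_hash` of the modified block, the `previous_hash` stored in the following block no longer matches, so every later block would have to be rebuilt as well.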

Illustration 2: Chain of Blocks

Transaction: A transaction contains the from- (sender) address², the to- (receiver) address, the amount to be transferred, the transaction fee the miner receives as a reward for mining this transaction and, optionally, some data or code in case the transaction runs in the context of smart contracts. Finally, the transaction is signed with the private-key of the sender, which secures the transaction and makes sure that nobody can modify its content (e.g. amount or receiver).

Network: A network consists of regular-nodes and mining-nodes. There are separate networks for the different blockchain technologies, e.g. bitcoin and ethereum, and within each technology there are further separate networks for different purposes, e.g. the production network (the official public network where money/coins are traded), networks for testing, and networks for private or consortium purposes.

Cryptocurrency: Cryptocurrency is the term for the currency that is used in the context of blockchain when transferring assets (money/coins) within a transaction. A cryptocurrency is bound to a blockchain, i.e. it cannot be used across different blockchains³, and the value of the currency grows with the number of participants, transactions and goods that are exchanged and, of course, depends on supply and demand. The two most famous cryptocurrencies are bitcoin and ether.

Coins versus Tokens: Both are cryptocurrencies, but while a coin (Bitcoin, Litecoin, Dogecoin) operates on its own blockchain, a token lives on top of an existing blockchain infrastructure like Ethereum. Coins can be created by starting a new blockchain network (and e.g. giving it the name of your coin) or by hard forking an existing blockchain, i.e. modifying the underlying software and/or configuration (see chapter 2.1) and e.g. giving the fork the name of your coin. Tokens are created and traded using smart contracts (see below), which manage the exchange of assets (e.g. a concert ticket / token) for coins.

Consensus Algorithm: To create a block and calculate its hash value, different approaches aka consensus algorithms are used in different networks. The most famous algorithm, used in the public bitcoin and ethereum networks, is proof-of-work. In proof-of-work the difficulty is permanently adjusted to keep the block rate constant (in bitcoin an average of 6 blocks per hour; ethereum targets a much shorter block time in the order of 15 seconds) and to make sure that the chain is not spammed with transactions (besides the fact that every transaction costs coins). In private and consortium networks, where the participating nodes are known and trusted, the proof-of-authority algorithm is normally used. In these networks mining- aka authority-nodes are only added to the network if they are trusted by the other miners. Further consensus algorithms that we will not go into are proof-of-stake, proof-of-burn and proof-of-activity.
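As a rough illustration of proof-of-work and difficulty adjustment, the following sketch searches for a nonce whose SHA-256 hash lies below a target and rescales the target proportionally to the observed block time. The function names, the string-based block data and the proportional rule are assumptions made for this example; the actual bitcoin and ethereum algorithms differ in hash function, header layout and adjustment formula.

```python
import hashlib

MAX_TARGET = 1 << 256  # upper bound of a SHA-256 value interpreted as an integer


def hash_value(block_data: str, nonce: int) -> int:
    """Hash the block data together with a nonce and return the result as an integer."""
    digest = hashlib.sha256(f"{block_data}:{nonce}".encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def mine(block_data: str, target: int) -> int:
    """Try nonces until the hash value is below the target (smaller target = harder)."""
    nonce = 0
    while hash_value(block_data, nonce) >= target:
        nonce += 1
    return nonce


def retarget(target: int, observed_seconds: int, expected_seconds: int) -> int:
    """Simplified adjustment: blocks that arrive too fast shrink the target."""
    adjusted = target * observed_seconds // expected_seconds
    return max(1, min(MAX_TARGET, adjusted))


target = MAX_TARGET >> 12  # on average about 4096 attempts per block
nonce = mine("block with some transactions", target)
print(hash_value("block with some transactions", nonce) < target)  # True

# Blocks arrived every 300 s instead of the expected 600 s: halve the target.
print(retarget(target, 300, 600) == target // 2)  # True
```

Verifying a mined block takes a single hash calculation, while finding the nonce takes many attempts; this asymmetry is what makes the invested effort provable.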

Wallet: A wallet stores the public- and private-keys of a participant, is connected to a node and hence meets the conditions to create and participate in transactions. Losing a wallet means losing all coins, because the private-key needed to create and sign transactions, and thus to spend the coins belonging to this account (public-key), is lost. Therefore a wallet, i.e. its public and private keys, should always be backed up!

Smart-Contract: A smart-contract is a piece of code that runs in the network, has a public-key and can therefore participate in transactions and accordingly receive and spend coins. Furthermore a smart contract exposes an interface (ABI: Application Binary Interface) whose methods can be invoked in the context of a transaction.

A smart contract has and needs no private-key, because the smart-contract code and the virtual machine (bitcoin, ethereum, etc.) are carefully defined and fully transparent (source code available), and therefore everyone will always agree on what the outcome of an operation was. This includes whether or not the contract sent a transaction to any other contract. Because everyone can agree on the origin of the message, no further degree of verification (such as that provided by a signature) is necessary.

Dapps (Decentralized Applications): Dapps are programs that run on top of smart contracts and communicate with them via their exposed ABI in the context of a transaction. If we compare a blockchain to a database and smart-contracts to stored procedures, then Dapps are the applications that run on top of the stored procedures.

Pros and Cons: Some pros and cons of using blockchain have already been listed at the beginning of this chapter under “«Elimination» Intermediary”. For a “full” list of pros and cons see [4]. Two arguments that were not listed are discussed here:

Pros: Transparency: One of the pros of the blockchain is that everyone possesses the ledger and can therefore see all the transactions and transfers that were made. BUT even someone who can see all transactions still cannot see the true identity of the person behind an account that participated in a transaction, which leaves leeway for illegal activities and transactions.

Cons: Energy: Due to the proof-of-work consensus algorithm a lot of computing power and energy is spent on computing the hash value of a block. This is a growing burden on the environment, and therefore a different consensus algorithm should be used wherever possible.

Funny: Blockchain & Cryptocurrency explained see [13].

The remainder of this document (starting with chapter 3) gives an introduction on blockchain technology with focus on the ethereum implementation.

In red font are the different issues the blockchain exposes and risks you need to consider.

In yellow highlighted are keywords, definitions and aspects that require your attention.

The architecture overview is based on ethereum but is conceptually identical for other blockchain implementations.

An Ethereum node can be addressed using the URL scheme "enode" notation.

The hexadecimal node ID is encoded in the username portion of the URL, separated from the host by an @ sign. The hostname can only be given as an IP address, DNS domain names are not allowed. The port in the host name section is the TCP listening port. If the TCP and UDP (discovery) ports differ, the UDP port is specified as query parameter "discport".

In the following example, the node URL describes a node with IP address 10.3.58.6, TCP listening port 30303 and UDP discovery port 30301.

enode://6f8a80d14311c39f35f516fa664deaaaa13e85b2f7493f37f6144d86991ec012937307647bd3b9a82abe2974e1407241d54947bbb39763a4cac9f77166ad92a0@10.3.58.6:30303?discport=30301

The enode URL scheme is used by the node discovery protocol and can be used in the bootnodes command line option of the client.

More information regarding the node address format can be found in [26].
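The address format described above can be taken apart with standard URL parsing. The following sketch is an illustration only: the function name `parse_enode` and the returned dictionary keys are made up for this example, and the length of the node ID is not checked.

```python
import ipaddress
from urllib.parse import parse_qs, urlsplit


def parse_enode(url: str) -> dict:
    """Split an enode URL into node ID, IP address, TCP port and UDP discovery port."""
    parts = urlsplit(url)
    if parts.scheme != "enode":
        raise ValueError("expected the enode URL scheme")
    if not parts.username:
        raise ValueError("missing node ID")
    bytes.fromhex(parts.username)  # raises ValueError if the node ID is not hexadecimal
    if parts.hostname is None or parts.port is None:
        raise ValueError("missing IP address or TCP port")
    ip = ipaddress.ip_address(parts.hostname)  # raises ValueError for DNS domain names
    query = parse_qs(parts.query)
    # Without a "discport" parameter the UDP port equals the TCP port.
    udp_port = int(query["discport"][0]) if "discport" in query else parts.port
    return {"node_id": parts.username, "ip": str(ip), "tcp_port": parts.port, "udp_port": udp_port}


example = (
    "enode://6f8a80d14311c39f35f516fa664deaaaa13e85b2f7493f37f6144d86991ec012"
    "937307647bd3b9a82abe2974e1407241d54947bbb39763a4cac9f77166ad92a0"
    "@10.3.58.6:30303?discport=30301"
)
info = parse_enode(example)
print(info["ip"], info["tcp_port"], info["udp_port"])  # 10.3.58.6 30303 30301
```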

Ethereum nodes use a peer-to-peer communication (TCP) listening port and a discovery (UDP) port, both 30303 by default.

peer-to-peer communication: Peer-to-peer communication between nodes running Ethereum/Whisper/etc. clients uses the underlying ÐΞVp2p Wire Protocol. ÐΞVp2p nodes communicate by sending messages using RLPx, an encrypted and authenticated transport protocol named after the RLP (Recursive Length Prefix) serialization format.

discovery (UDP): ÐΞVp2p nodes find peers through the RLPx discovery protocol, a DHT (Distributed Hash Table), i.e. a UDP-based RPC protocol for decentralized peer-to-peer computer networks.

Ethereum is “pseudo” decentralized, because the first time a node connects to the chain it needs a bootnode that tells it which peers it can connect to. From this point on the node connects directly to and synchronizes with its peers, performs node discovery with its peers and does not need the bootnode anymore. Bootnodes maintain a list of all nodes that connected to them in a period of time. Bootnodes are hardcoded into the source code of the chain but can be overridden by configuration. Instead of using bootnodes it is possible (in private/consortium chains) to provide a list of static/trusted nodes to connect to.

For further information, also regarding “bootnodes (blockchain) being down” due to a memory bug attack, see [33]!

Dynamic IP addresses are supported as long as at least the bootnode or one of its peers is still reachable and can be updated with the new IP address. From there the information is distributed to the other peers.

Additional information regarding the different protocols can be found in [27].

Light Clients & Light Ethereum Subprotocol (LES): Work in progress: LES is a sub-protocol of the ÐΞVp2p Wire Protocol. In Ethereum, a light client is a client that downloads only block headers by default and verifies only a small portion of what needs to be verified. Light clients do not interact directly with the blockchain; instead they use full nodes as intermediaries. Light clients rely on full nodes for many operations, from requesting the latest headers to asking for the balance of an account. As light clients need to send several requests for simple operations, the network bandwidth they need is, in the short term, higher than that of a full node, BUT only while the client is in use, whereas a full node is always online and participates in all blockchain communication. Furthermore, the amount of resources and storage needed is several orders of magnitude lower than that of a full node while still achieving a very high level of security. Requiring only about 100 MB of storage and little computational power, a light node can run on a mobile device! But consider:

Work in Progress: Light Clients and LES are still experimental and therefore instability is to be expected.

Incentive for running a full node: With the possibility of running a light client there is no incentive to invest money in memory, SSD disk space, CPU and bandwidth to run a full node. This light client trend will then backfire on the balance of the entire network, because more and more light clients rely on a decreasing number of full nodes, which destabilizes the entire chain!

There are various ongoing discussions on how to reward participants that run a full node, for example by paying a fee when a transaction is submitted to a full node, similar to the fees paid to miners for block mining.

Light clients do not expose peer-to-peer communication and discovery (UDP) ports, i.e. there is no incoming communication on these ports and protocols (because a light client is only up when used and relies on full nodes, i.e. it cannot act and reply on its own)! But since light clients rely on full nodes and on LES as a sub-protocol of the ÐΞVp2p Wire Protocol, outgoing communication uses the same ports: the peer-to-peer communication (TCP) port and the discovery (UDP) port, both 30303.

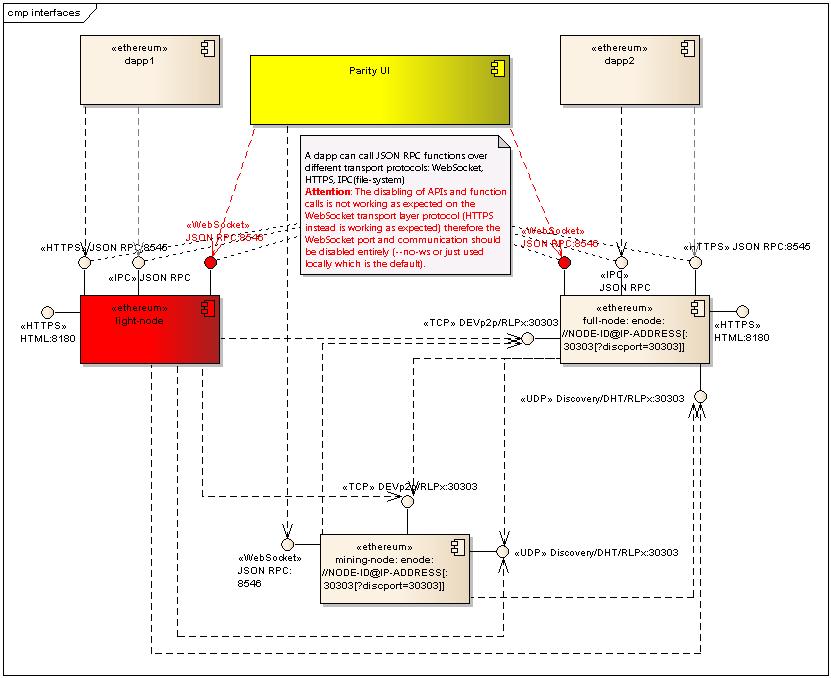

JSON RPC: Dapps connect to a node and communicate with smart contracts via the JSON RPC protocol. In the context of ethereum, JSON RPC can run on top of different transport protocols, namely HTTPS (port 8545), WebSocket (port 8546) and IPC (Inter Process Communication), which runs locally on the file system. But consider:

Encryption: Some functions (although not the official JSON RPC functions that are exposed by default) send credentials over the wire. Special care regarding transport encryption should therefore be taken when using these functions, even more so if the node being connected to is not running locally.

Unlocked Accounts: If you have RPC on (i.e. run geth/parity with --rpc) AND unlock the account AND have no firewall at all AND have --rpcaddr set to "0.0.0.0", then anyone can easily scan your node, retrieve all accounts with web3.eth.getAccounts([callback]) (returns a list of accounts the node controls) and transfer money from all unlocked accounts using web3.eth.signTransaction(transactionObject, address [, callback]) and web3.eth.sendSignedTransaction(signedTransactionData [, callback]). In this way cybercriminal groups managed to steal a total of 38,642 Ether, worth more than $20,500,000, in Q2 2018 alone!

WebSocket: Security Issues: Disabling APIs, see [34]: Disabling APIs and function calls does not work as expected on the WebSocket transport protocol (whereas it works as expected on HTTPS). Therefore the WebSocket port and communication should be disabled entirely (--no-ws) or only used locally (which is the default), and the HTTPS protocol should be used and exposed instead (at least for the current parity version 1.11.8)⁴!
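To make the JSON RPC exchange more concrete, the sketch below builds JSON-RPC 2.0 request bodies for the standard methods `eth_blockNumber` and `eth_accounts`, decodes a hex-encoded result, and flags an RPC bind address that is reachable from outside the local machine. The helper names are hypothetical and nothing is sent over the network; the response string is a hand-written sample.

```python
import ipaddress
import itertools
import json

_request_ids = itertools.count(1)  # JSON-RPC requests carry an id to match responses


def build_request(method: str, params=None) -> str:
    """Serialize a JSON-RPC 2.0 request body for the given method."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params or []}
    return json.dumps(request)


def parse_quantity(response_body: str) -> int:
    """Decode a hex-encoded quantity (e.g. a block number) from a response body."""
    response = json.loads(response_body)
    if "error" in response:
        raise RuntimeError(response["error"])
    return int(response["result"], 16)


def is_exposed(rpc_address: str) -> bool:
    """Return True if the RPC interface is bound to anything but the loopback address."""
    return not ipaddress.ip_address(rpc_address).is_loopback


print(build_request("eth_blockNumber"))
print(build_request("eth_accounts"))  # the call an attacker would use to list accounts
print(parse_quantity('{"jsonrpc": "2.0", "id": 1, "result": "0x4b7"}'))  # 1207
print(is_exposed("0.0.0.0"), is_exposed("127.0.0.1"))  # True False
```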

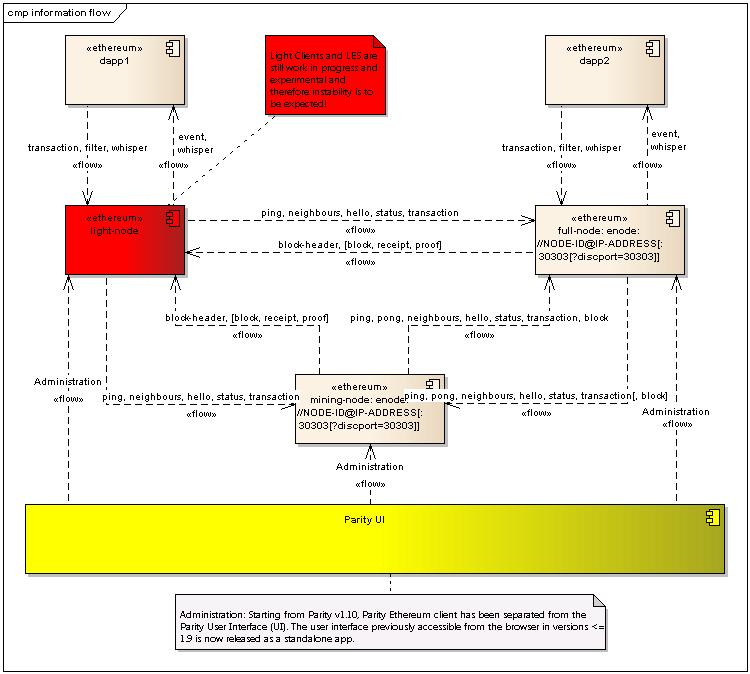

Illustration 3: Blockchain: Ethereum: Information Flow

Full-nodes and mining-nodes are very similar and run the same software, e.g. parity or geth, with the main difference that mining-nodes produce blocks and require a lot of computing power. For this reason “[block]” is put in square brackets on the outgoing flow of the full-node: because full-nodes have all information they can provide block information to other nodes, but they do not produce blocks and spread them into the network.

Light nodes only retrieve and store all block-headers. “[block, receipt, proof]” information is only retrieved on demand when needed and therefore put into square brackets.

“ping”, “pong” and “neighbor” information is exchanged as part of the node discovery. But light clients do not expose a discovery port i.e. cannot receive a “ping” and therefore do not send a “pong”.

“hello” and “status” are exchanged when two nodes connect to each other, exchange their status and start synchronizing the chain.

Illustration 4: Blockchain: Ethereum: Interfaces

Based on the introduction and overview given in chapter 1, this chapter further details and defines terms used in the blockchain context.

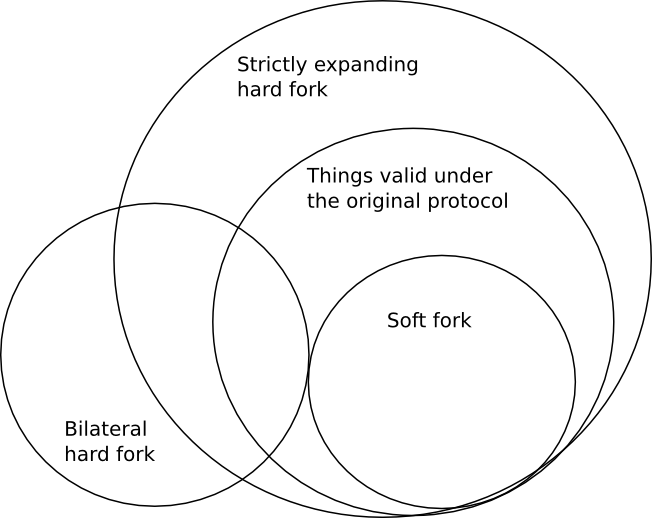

Besides temporary forks, which happen when different miners create blocks at the same time or due to temporary network segregation (see chapter 1), a hard- respectively soft-fork is caused by a non-forward- respectively forward-compatible change of the blockchain protocol rule-set in the underlying node software and/or configuration.

Illustration 5: Soft- versus Hard-Fork

Soft fork: A soft fork is a forward-compatible change that tightens the rule-set of the blockchain protocol. All transactions (and blocks) created using the new software will be accepted by all nodes, regardless of whether they are running the old or new software. Transactions (and blocks) created using the old software will be accepted by nodes running the old software, but will only be accepted by nodes running the new software if they don’t violate the new, tightened protocol rule-set.

Sequence Flow: Mining nodes running the old software will accept and mine all transactions coming from nodes running the old or new software. Mining nodes running the new software will accept and mine all transactions from nodes running the new software but will only accept transactions from nodes running the old software if they do not violate the new tightened protocol rule-set.

The resulting “old” blocks (blocks produced by miners running the old software) are accepted by all nodes running the old software, but nodes running the new software will only accept the “old” blocks if they do not contain transactions that violate the new tightened protocol rule-set. The resulting “new” blocks are accepted by all nodes, whether they run the old or new software.

Based on these facts, all full-nodes and mining-nodes running the old or new software can coexist and work on the same blockchain until the first node running the old software creates a transaction that violates the new tightened protocol rule-set and this transaction gets mined by a miner running the old software. At this point the chain forks, and the block is only added to the chain of the nodes running the old software. From this point/block on, the two chains run and extend in parallel. The “old” chain (i.e. the chain of the nodes running the old software) has (initially) more hashing power, i.e. more mining nodes, and will therefore grow faster and accordingly ignore the new chain, which is also valid but shorter. The new chain will ignore the old chain because it is invalid, i.e. it contains block(s) containing invalid transaction(s). But as soon as the new chain attracts more mining power and grows longer than the old chain, all the old nodes suddenly switch over to the new chain, because it is valid (i.e. valid according to both the old and new rules, i.e. forward compatible) and longer. From this point on there is again only one chain for all nodes.
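The sequence flow above can be replayed with a toy model. It assumes a hypothetical rule “maximum number of transactions per block”, represents a chain as a list of per-block transaction counts, and uses chain length as a stand-in for cumulative mining effort; none of this reflects a real client implementation.

```python
OLD_MAX_TX = 3  # hypothetical old rule: at most 3 transactions per block
NEW_MAX_TX = 2  # hypothetical tightened rule introduced by the soft fork


def chain_is_valid(chain: list, max_tx: int) -> bool:
    """A chain is valid for a node if every block respects the node's rule."""
    return all(tx_count <= max_tx for tx_count in chain)


def choose_chain(candidates: list, max_tx: int) -> list:
    """Pick the longest chain among those the node considers valid."""
    valid = [chain for chain in candidates if chain_is_valid(chain, max_tx)]
    return max(valid, key=len)


common = [1, 2]                  # blocks mined before the fork
old_chain = common + [3, 1, 1]   # contains a block that violates the new rule
new_chain = common + [2, 1]      # valid under old and new rules, but shorter

# Old nodes follow the longer old chain, new nodes reject it as invalid.
print(choose_chain([old_chain, new_chain], OLD_MAX_TX) == old_chain)  # True
print(choose_chain([old_chain, new_chain], NEW_MAX_TX) == new_chain)  # True

# Once the new chain has attracted more mining power and grown longer,
# old nodes switch over as well, because it is also valid under the old rule.
new_chain = new_chain + [2, 1]
print(choose_chain([old_chain, new_chain], OLD_MAX_TX) == new_chain)  # True
```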

UASF versus MASF: Soft forks can either be user activated (UASF: User Activated Soft Fork) or miner activated (MASF: Miner Activated Soft Fork). A UASF takes place when the majority of users, i.e. their full-nodes, upgrade their software. In this scenario the miners have to upgrade their software in order to keep earning coins, because only a few “old” nodes with little financial power are left, which create few transactions and therefore pay few transaction fees to the “old” miners. A MASF takes place when the majority of miners, i.e. their nodes, upgrade their software. In this scenario the users, i.e. their full nodes, have to upgrade their software; otherwise they have to wait a very long time for their transactions to be mined, up to the point where their transactions are not mined at all anymore, i.e. when no miners running the old software are left.

New Features: At first sight it seems impossible to add new features and functionality using a soft fork because a soft fork tightens the existing blockchain protocol rule-set that is used to (create and) validate transactions and blocks. But the transactions protocol exposes various places where additional data and functionality can be introduced without violating the existing protocol rule-set. And this is how new features can be introduced using a soft-fork.

Bitcoin: The bitcoin blockchain is a bit more advanced in this context, because blocks include a version field (see [8]) respectively a version vector (see [9]). The version field supports the definition of thresholds (percentage of nodes running the new software) for how long the old and new versions are supported in parallel and for when a soft-fork is enforced and only “new” blocks are accepted. In addition the version vector supports the concept of several soft-forks running in parallel.

Hard Fork: Bilateral versus Strictly Expanding: A hard fork is a non-forward compatible change. Hard forks require “immediately” that all nodes upgrade their software because “old” nodes will not accept (certain) “new” transactions and blocks and therefore no soft migration is possible compared to a soft-fork but instead a “big-bang” upgrade is required.

Strictly Expanding Hard Fork: A strictly expanding hard fork is backward compatible: all transactions (and blocks) created using the old software will be accepted by all nodes, regardless of whether they are running the old or new software. Transactions (and blocks) created using the new software will be accepted by nodes running the new software, but will only be accepted by nodes running the old software if they don’t violate the old, tighter protocol rule-set.

Bilateral Hard Fork: A bilateral hard fork is neither backward- nor forward-compatible.
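The three kinds of rule change can be summarized as set relations between what the old and the new software accept. The sketch below models a rule-set as the set of block sizes (in transactions) it accepts; this representation and the function names are assumptions made purely for illustration.

```python
def classify_change(old_valid: set, new_valid: set) -> str:
    """Classify a protocol change by comparing what old and new software accept."""
    if new_valid == old_valid:
        return "no fork"
    if new_valid < old_valid:   # new rules accept a proper subset: tightened
        return "soft fork"
    if new_valid > old_valid:   # new rules accept a proper superset: loosened
        return "strictly expanding hard fork"
    return "bilateral hard fork"  # neither rule-set contains the other


def blocks_with_tx_count(minimum: int, maximum: int) -> set:
    """Hypothetical rule-set: all block sizes between minimum and maximum are valid."""
    return set(range(minimum, maximum + 1))


old_rules = blocks_with_tx_count(1, 3)
print(classify_change(old_rules, blocks_with_tx_count(1, 2)))  # soft fork
print(classify_change(old_rules, blocks_with_tx_count(1, 5)))  # strictly expanding hard fork
print(classify_change(old_rules, blocks_with_tx_count(2, 5)))  # bilateral hard fork
```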

Whether or not a fork takes place is ultimately decided by the miners, i.e. by whether or not the majority of them update to the new software version, because in the end only miners can create blocks and add them to the chain.

The DAO: A decentralized autonomous organization and a form of investor-directed venture capital fund, written in Solidity and deployed as a smart-contract on 30.04.2016, attracted more than 150 million US$ in Ether in a 20-day crowdsale. BUT the software had a bug and a hacker transferred a third of the entire fund to his account!

A soft-fork was planned to restrict the protocol rule-set and reject all blocks containing a transaction to or from the hacker account. BUT this software change could have resulted in DoS attacks: hackers could have flooded the network with computation-intensive (and therefore ether-expensive) transactions and, as the last transaction in a block, created a transaction to or from the DAO hacker account. This would have caused the entire block to be rejected and given the hackers back the money they had “invested” in the computation-intensive transactions. Therefore a hard-fork was performed in the end, which split the chain into two separate networks.

There exist dozens of articles about soft- and hard-forks see [10].

See chapter 1: A wallet stores the public- and private-keys of a participant, is connected to a full-node and hence meets the conditions to create and participate in transactions. Losing a wallet means losing all coins, because the private-key needed to create and sign transactions, and thus to spend the coins belonging to this account (public-key), is lost. Therefore a wallet, i.e. its public and private keys, should always be backed up!

When choosing a wallet, the owner must keep in mind who is supposed to have access to (a copy of) the private keys and thus has potentially access to the coins/asset. Just like with a bank, the user needs to trust the provider to keep the coins safe. Downloading a wallet from a wallet provider to a computer or phone does not automatically mean that the owner is the only one who has a copy of the private keys. For example with Coinbase, it is possible to install a wallet on a phone and to also have access to the same wallet through their website.

A backup of a wallet can come in different forms like:

A (encrypted) file like wallet.dat or wallet.bin which contains all the private keys.

A mnemonic sentence from which the root key can be generated, from which all the private keys can be recreated. Preferably these words could be remembered or written down and stored on other physical locations. For more information on mnemonics see next chapter 2.2.1 below.

A private key like: KxSRZnttMtVhe17SX5FhPqWpKAEgMT9T3R6Eferj3sx5frM6obqA

When the private keys and the backup are lost, the coins are lost forever. When using a web wallet, the private keys are managed by the provider. When owning coins, those trusted with managing the private keys should be carefully selected. An (encrypted) copy of the wallet should be kept in a trusted place, preferably off-line. Some people 'write' their mnemonic sentence or private key on metal, because it is robust.

Alternatively a “paranoid” user can write the code that creates a public-/private-key pair and the code that signs the transaction with the private-key themselves, see chapters 3.7.3 and 3.7.4.

For further interesting articles see [14].

Most content in this chapter is extracted from the article “Deterministic Wallet” see [15].

A deterministic wallet is a system, or rather an algorithm, for deriving keys from a single starting point known as a seed. The seed allows a user to easily back up and restore a wallet without needing any other information and can allow the creation of public addresses without knowledge of the private key. Seeds are typically serialized into human-readable words in a mnemonic phrase. In other words, instead of memorizing all the public/private key pairs contained in the wallet, the user only needs to memorize the mnemonic sentence, because all public/private key pairs can be derived (calculated) from this mnemonic.

Deterministic wallets can generate an unlimited number of addresses on the fly and as the addresses are generated in a known fashion rather than randomly some clients can be used on multiple devices without the risk of losing funds. Users can conveniently create a single backup of the seed in a human readable format that will last the life of the wallet, without the worry of this backup becoming stale.
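The derivation idea described above can be sketched in a few lines. This is a simplified illustration only and deliberately not the BIP0032 algorithm: it assumes Node.js with its built-in crypto module and derives one secret per index with HMAC-SHA512. The helper name deriveChildSecret and the sample seed are hypothetical.

```javascript
// Simplified illustration of deterministic derivation (NOT BIP0032).
// Assumption: Node.js with its built-in crypto module.
const crypto = require('crypto');

// Hypothetical helper: derives the secret for a given index from the seed.
function deriveChildSecret(seed, index) {
  const data = Buffer.alloc(4);
  data.writeUInt32BE(index, 0);
  // The seed is used as the HMAC key, the index as the message.
  const digest = crypto.createHmac('sha512', seed).update(data).digest();
  // Keep the first 32 bytes as the derived secret, encoded as hex.
  return digest.subarray(0, 32).toString('hex');
}

const seed = Buffer.from('example seed, never use in production', 'utf8');
const first = deriveChildSecret(seed, 0);
const second = deriveChildSecret(seed, 1);

console.log(first !== second);                     // each index yields its own secret
console.log(first === deriveChildSecret(seed, 0)); // same seed and index, same secret
```

Because every secret is a pure function of the seed and the index, backing up the seed once is enough to recreate all of them later.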

Certain types of deterministic wallet (BIP0032, Armory, Coinkite and Coinb.in) additionally allow for the complete separation of private and public key creation for greater security and convenience. In this model a server can be set up to only know the Master Public Key (MPK) of a particular deterministic wallet. This allows the server to create as many public keys as are necessary for receiving funds, but a compromise of the MPK will not allow an attacker to spend from the wallet.

Mnemonic (German: “Eselsbrücke”): See [16]: Pronounced “ne-manik”, in its purest form a mnemonic is a pattern of letters, words, or associations which allows the user to easily remember information, and has been used by humans for thousands of years. In other words, it can be a very useful tool to help us memorize important information we need to remember.

For further interesting articles see [14].

Ethereum is an open-source, blockchain-based distributed computing platform and operating system featuring smart contract (scripting) functionality.

Ether is the cryptocurrency of the Ethereum blockchain. Ether can be transferred between accounts and used to compensate participant mining nodes for computations performed.

Ethereum provides a decentralized Turing-complete virtual machine, the Ethereum Virtual Machine (EVM), which can execute smart contracts (scripts) on a network of nodes. "Gas", an internal transaction fee/pricing mechanism, is used to mitigate spam and allocate resources on the network.

Ethereum has a metric system of denominations used as units of ether. The smallest denomination aka base unit of ether is called Wei.

Below is a list of the named denominations and their value in Wei.

ether = 1e18 wei

milliether (finney) = 1e15 wei

microether (szabo) = 1e12 wei

gwei (shannon) = 1e9 wei

mwei (lovelace) = 1e6 wei

kwei (babbage) = 1e3 wei
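The denominations listed above translate directly into a lookup table. The following sketch assumes a JavaScript runtime with BigInt support (e.g. a current Node.js); the helper name toWei is chosen for illustration here and only handles whole-number amounts.

```javascript
// Wei values of the named denominations, as BigInt to avoid
// floating-point rounding (1e18 exceeds Number.MAX_SAFE_INTEGER).
const WEI_PER_UNIT = {
  wei: 1n,
  kwei: 10n ** 3n,
  mwei: 10n ** 6n,
  gwei: 10n ** 9n,
  szabo: 10n ** 12n,
  finney: 10n ** 15n,
  ether: 10n ** 18n,
};

// Hypothetical helper: converts a whole-number amount of `unit` to wei.
function toWei(amount, unit) {
  const factor = WEI_PER_UNIT[unit];
  if (factor === undefined) throw new Error(`unknown unit: ${unit}`);
  return BigInt(amount) * factor;
}

console.log(toWei(1, 'ether').toString());  // 1000000000000000000
console.log(toWei(10, 'szabo').toString()); // 10000000000000
```

In web3.js the corresponding conversions are provided by web3.utils.toWei and web3.utils.fromWei.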

Further detailed information about Ethereum can be found in the Ethereum white paper (see [5]) and yellow paper (see [6]), which are the basis for Ethereum.

Each network version gets a separate name (and id). Here is an overview – see as well [12].

Olympic (0) is also regularly referred to as Ethereum 0.9; it launched early 2015 and was the first public Testnet. Deprecated in mid 2015 and replaced by Morden.

Frontier (1) the official 1.0 release was launched as public main network in the summer of 2015. Forked to Homestead in early 2016.

Morden (2) was the Frontier-equivalent testnet; it launched with Frontier and basically replaced Olympic. Deprecated in late 2016 and replaced by Ropsten.

Homestead (1) was the first major upgrade (1.1) of the Frontier network in March 2016. It did not replace Frontier but upgraded it.

Ropsten (3) is a new Homestead-equivalent testnet launched in late 2016 due to multiple issues in the old testnet; it finally replaced Morden. Ropsten was attacked in February 2017 and declared dead, but with great effort it was revived in March 2017.

PoW

Supported by geth and parity

Best reproduces the current production environment

Chaindata size 15 GB - Apr 2018

Ether can be mined. Or requested from a faucet:

Commands:

geth --testnet or geth --networkid 3

parity --chain ropsten

Kovan (42) is the first proof-of-authority (PoA) testnet issued by Ethcore, Melonport, and Digix after the Ropsten attacks.

PoA (Immune to spam attacks)

Supported by parity only

Chaindata size 13 GB - Apr 2018

Ether can't be mined. It has to be requested from the faucet: https://github.com/kovan-testnet/faucet

Command: parity --chain kovan

Rinkeby (4) is another PoA testnet, launched in 2017.

PoA (Immune to spam attacks)

Supported by geth only

Chaindata size 6 GB - Apr 2018

Ether can't be mined. It has to be requested from a faucet: https://faucet.rinkeby.io/

Command: geth --rinkeby or geth --networkid 4

The current protocol version is Homestead and Ropsten is the public Homestead-equivalent testnet.

Despite the differences in name, Olympic, Morden and Ropsten have the network ids 0, 2 and 3. Frontier and Homestead are the main network with id 1. You can run your own chain by specifying a network id other than 0, 1, 2, or 3 (and, to avoid clashes with the PoA testnets listed above, other than 4 and 42).
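The mapping between network names and ids given above can be kept in a small lookup table, for example to check that the id chosen for a private chain does not clash with a public network. This is only a sketch; the helper name isFreeNetworkId and the sample id 4711 are hypothetical.

```javascript
// Network ids of the public networks listed above.
const PUBLIC_NETWORK_IDS = new Map([
  [0, 'Olympic'],
  [1, 'Frontier / Homestead (main network)'],
  [2, 'Morden'],
  [3, 'Ropsten'],
  [4, 'Rinkeby'],
  [42, 'Kovan'],
]);

// Hypothetical helper: true if `id` does not clash with a public network.
function isFreeNetworkId(id) {
  return Number.isInteger(id) && id >= 0 && !PUBLIC_NETWORK_IDS.has(id);
}

console.log(isFreeNetworkId(3));    // false (Ropsten)
console.log(isFreeNetworkId(4711)); // true
```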

Most content in this chapter is extracted from the article “Introduction to Smart Contracts” see [17].

The Ethereum Virtual Machine or EVM is the runtime environment for smart contracts in Ethereum. It is not only sandboxed but actually completely isolated, which means that code running inside the EVM has no access to network, filesystem or other processes. Smart contracts even have limited access to other smart contracts.

There are two kinds of accounts in Ethereum which share the same address space: External accounts that are controlled by public-private key pairs (i.e. humans) and contract accounts which are controlled by the code stored together with the account.

The address of an external account is determined from the public key, while the address of a contract is determined at the time the contract is created, i.e. deployed (it is derived from the creator address and the number of transactions sent from that address, the so-called “nonce”).

Regardless of whether or not the account stores code, the two types are treated equally by the EVM.

Every account has a persistent key-value store mapping 256-bit words to 256-bit words called storage.

Furthermore, every account has a balance in Ether (in “Wei” to be exact) which can be modified by sending transactions that include Ether.

A transaction is a message that is sent from one account to another account (which might be the same or the special zero-account, see below). It can include binary data (its payload) and Ether.

If the target account contains code, that code is executed and the payload is provided as input data.

If the target account is the zero-account (the account with the address 0), the transaction creates a new contract. As already mentioned, the address of that contract is not the zero address but an address derived from the sender and its number of transactions sent (the “nonce”). The payload of such a contract creation transaction is taken to be EVM bytecode and executed. The output of this execution is permanently stored as the code of the contract. This means that in order to create a contract, you do not send the actual code of the contract, but in fact code that returns that code.

Upon creation, each transaction is charged with a certain amount of gas, whose purpose is to limit the amount of work that is needed to execute the transaction and to pay for this execution. While the EVM executes the transaction, the gas is gradually depleted according to specific rules.

The gas price is a value set by the creator of the transaction, who has to pay gas_price * gas up front from the sending account. If some gas is left after the execution, it is refunded in the same way.

If the gas is used up at any point (i.e. it is negative), an out-of-gas exception is triggered, which reverts all modifications made to the state in the current call frame.
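The accounting described above can be sketched as follows. This is a toy model, not EVM code: the function name settleTransaction and its parameters are hypothetical, all amounts are BigInt values, and it assumes that an out-of-gas transaction consumes the complete gas supplied.

```javascript
// Hypothetical model of the gas accounting described above.
// gasLimit: gas supplied with the transaction
// gasPrice: price in wei per unit of gas, set by the sender
// gasNeeded: gas the execution would require
function settleTransaction(gasLimit, gasPrice, gasNeeded) {
  const upFront = gasLimit * gasPrice;            // charged before execution
  const outOfGas = gasNeeded > gasLimit;          // remaining gas would go negative
  // Assumption: on out-of-gas all supplied gas is consumed.
  const gasUsed = outOfGas ? gasLimit : gasNeeded;
  const refund = (gasLimit - gasUsed) * gasPrice; // unused gas is paid back
  return { upFront, refund, fee: upFront - refund, outOfGas };
}

// 50000 gas supplied at 20 gwei per gas, 21000 gas actually needed.
const ok = settleTransaction(50000n, 20n * 10n ** 9n, 21000n);
console.log(ok.fee.toString());    // 420000000000000 (wei)
console.log(ok.refund.toString()); // 580000000000000 (wei)
```

Calling the function with a gasNeeded above the gasLimit sets outOfGas to true and yields a refund of zero, which mirrors the state revert plus full fee described in the text.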

With Bitcoin, miners prioritise transactions with the highest mining fees. The same is true of Ethereum, where miners are free to ignore transactions whose gas price is too low.

The gas price per transaction or contract is set up to deal with the Turing-complete nature of Ethereum and its EVM (Ethereum Virtual Machine); the idea is to limit infinite loops. So for example 10 Szabo, or 0.00001 Ether, or 1 Gas can execute a line of code or some command. If there is not enough Ether in the account to perform the transaction or message, it is considered invalid. The aim is to stop denial-of-service attacks from infinite loops, to encourage efficiency in the code, and to make an attacker pay for the resources they use, from bandwidth through CPU calculations to storage.

Each account has a persistent memory area which is called storage. Storage is a key-value store that maps 256-bit words to 256-bit words. It is not possible to enumerate storage from within a contract and it is comparatively costly to read and even more so, to modify storage. A contract can neither read nor write to any storage apart from its own.

The second memory area is called memory, of which a contract obtains a freshly cleared instance for each message call. Memory is linear and can be addressed at byte level, but reads are limited to a width of 256 bits, while writes can be either 8 bits or 256 bits wide. Memory is expanded by a word (256-bit) when accessing (either reading or writing) a previously untouched memory word (i.e. any offset within a word). At the time of expansion, the cost in gas must be paid. Memory is more costly the larger it grows (it scales quadratically).
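The quadratic growth mentioned above can be made concrete with a small calculation. The sketch assumes the memory cost formula from the yellow paper [6], cost(words) = 3 * words + floor(words² / 512), where words counts 32-byte words; the constants 3 and 512 come from there and are not stated in this document, and the function names are chosen for illustration.

```javascript
// Total gas cost of a memory of the given size (in 32-byte words),
// assuming the yellow paper formula 3 * words + floor(words^2 / 512).
function memoryCost(words) {
  const w = BigInt(words);
  return 3n * w + (w * w) / 512n; // BigInt division truncates
}

// Gas charged when memory grows from `before` to `after` words.
function expansionCost(before, after) {
  return after > before ? memoryCost(after) - memoryCost(before) : 0n;
}

console.log(memoryCost(32).toString());   // 98   (1 KiB)
console.log(memoryCost(1024).toString()); // 5120 (32 KiB)
```

For small sizes the linear term dominates; the quadratic term only becomes noticeable once memory reaches several hundred words.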

The EVM is not a register machine but a stack machine, so all computations are performed on an area called the stack. It has a maximum size of 1024 elements and contains words of 256 bits. Access to the stack is limited to the top end in the following way: It is possible to copy one of the topmost 16 elements to the top of the stack or swap the topmost element with one of the 16 elements below it. All other operations take the topmost two (or one, or more, depending on the operation) elements from the stack and push the result onto the stack. Of course it is possible to move stack elements to storage or memory, but it is not possible to just access arbitrary elements deeper in the stack without first removing the top of the stack.
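The stack rules described above can be illustrated with a toy model. It is not an EVM implementation: it only covers push, pop, the copy (dup) and swap access rules, and one arithmetic operation. The class and method names are chosen for this sketch.

```javascript
// Toy model of the EVM stack rules described above (not a real EVM).
const MAX_STACK = 1024;
const WORD_MASK = (1n << 256n) - 1n; // words are 256 bits wide

class Stack {
  constructor() { this.items = []; }

  push(word) {
    if (this.items.length >= MAX_STACK) throw new Error('stack overflow');
    this.items.push(BigInt(word) & WORD_MASK);
  }

  pop() {
    if (this.items.length === 0) throw new Error('stack underflow');
    return this.items.pop();
  }

  // Copy the n-th element from the top (1..16) to the top of the stack.
  dup(n) {
    if (n < 1 || n > 16 || n > this.items.length) throw new Error('invalid dup');
    this.push(this.items[this.items.length - n]);
  }

  // Swap the topmost element with the n-th element below it (1..16).
  swap(n) {
    if (n < 1 || n > 16 || n >= this.items.length) throw new Error('invalid swap');
    const top = this.items.length - 1;
    const tmp = this.items[top];
    this.items[top] = this.items[top - n];
    this.items[top - n] = tmp;
  }

  // Takes the two topmost words and pushes their sum modulo 2^256.
  add() { this.push(this.pop() + this.pop()); }
}

const s = new Stack();
s.push(2n);
s.push(3n);
s.dup(2);  // stack (bottom to top): 2, 3, 2
s.add();   // stack: 2, 5
s.swap(1); // stack: 5, 2
console.log(s.items.map(String)); // [ '5', '2' ]
```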

The instruction set of the EVM is kept minimal in order to avoid incorrect implementations which could cause consensus problems. All instructions operate on the basic data type, 256-bit words. The usual arithmetic, bit, logical and comparison operations are present. Conditional and unconditional jumps are possible. Furthermore, contracts can access relevant properties of the current block like its number and timestamp.

Contracts can call other contracts or send Ether to non-contract accounts by the means of message calls. Message calls are similar to transactions, in that they have a source, a target, data payload, Ether, gas and return data. In fact, every transaction consists of a top-level message call which in turn can create further message calls.

A contract can decide how much of its remaining gas should be sent with the inner message call and how much it wants to retain. If an out-of-gas exception happens in the inner call (or any other exception), this will be signalled by an error value put onto the stack. In this case, only the gas sent together with the call is used up. In Solidity, the calling contract causes a manual exception by default in such situations, so that exceptions “bubble up” the call stack.

As already said, the called contract (which can be the same as the caller) will receive a freshly cleared instance of memory and has access to the call payload - which will be provided in a separate area called the calldata. After it has finished execution, it can return data which will be stored at a location in the caller’s memory preallocated by the caller.

Calls are limited to a depth of 1024, which means that for more complex operations, loops should be preferred over recursive calls.

There exists a special variant of a message call, named delegatecall which is identical to a message call apart from the fact that the code at the target address is executed in the context of the calling contract and msg.sender and msg.value do not change their values.

This means that a contract can dynamically load code from a different address at runtime. Storage, current address and balance still refer to the calling contract, only the code is taken from the called address.

This makes it possible to implement the “library” feature in Solidity: Reusable library code that can be applied to a contract’s storage, e.g. in order to implement a complex data structure.
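The difference between a regular message call and delegatecall can be sketched as a toy model of the execution context. This is not EVM code; the context fields and the function names messageCall and delegateCall are hypothetical and only mirror the description above.

```javascript
// Toy model of execution contexts (not real EVM code).
// code:    address whose code is executed
// address: account whose storage and balance are used
// sender:  value of msg.sender, value: value of msg.value

// Regular message call: the target runs its own code on its own storage.
function messageCall(ctx, target, value) {
  return { code: target, address: target, sender: ctx.address, value: value };
}

// delegatecall: only the code changes; address, msg.sender and
// msg.value stay those of the calling context.
function delegateCall(ctx, target) {
  return { code: target, address: ctx.address, sender: ctx.sender, value: ctx.value };
}

// A user sends 5 wei to contract A, which then uses code located at B.
const inA = { code: 'A', address: 'A', sender: 'user', value: 5n };
const viaCall = messageCall(inA, 'B', 0n);
const viaDelegate = delegateCall(inA, 'B');

console.log(viaCall.address, viaCall.sender);         // B A
console.log(viaDelegate.address, viaDelegate.sender); // A user
```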

It is possible to store data in a specially indexed data structure that maps all the way up to the block level. This feature called logs is used by Solidity in order to implement events. Contracts cannot access log data after it has been created, but they can be efficiently accessed from outside the blockchain. Since some part of the log data is stored in bloom filters, it is possible to search for this data in an efficient and cryptographically secure way, so network peers that do not download the whole blockchain (“light clients”) can still find these logs.

Contracts can even create other contracts using a special opcode (i.e. they do not simply call the zero address). The only difference between these create calls and normal message calls is that the payload data is executed and the result stored as code and the caller / creator receives the address of the new contract on the stack.

The only way code can be removed from the blockchain is when a contract at that address performs the selfdestruct operation. The remaining Ether stored at that address is sent to a designated target and then the storage and code are removed from the state.

A node is a computer that is part of the Ethereum network. A node stores either a part (light client) or a full copy (full node) of the blockchain and updates the blockchain continuously. Furthermore, there exist mining nodes that confirm transactions, i.e. perform mining.

See [7]: What does the client software do?

It downloads the whole blockchain onto your system on a regular basis, keeping tabs on the whole network. It verifies all transactions and contracts on the blockchain. If you are building your own contracts, it broadcasts them to the network so that they are included in the next block and confirmed by the miners. Client software can also do the mining, but these days you may need a super-computer to make any ether this way.

Both geth and parity require 2-4GB of RAM and 50-100GB of hard drive space for storing the blockchain. And both require extensive memory so ~4GB swap needs to be set up.

There exist different Ethereum clients (listed here in descending order of popularity): go-ethereum (geth), Parity, cpp-ethereum, pyethapp, ethereumjs-lib, Ethereum(J), ruby-ethereum, ethereumH. But there exists no reliable comparison between them. Some people suggest parity because it is popular, flexible and seems to be a little bit faster than the others. What I’ve seen so far is that the test network support differs (especially regarding PoA); see chapter 3.1.

In this document we will focus on the parity client/node. Most content in this chapter is extracted from the parity wiki see [18].

Summary: Parity is an Ethereum client, written from the ground-up for correctness-verifiability, modularisation, low-footprint and high-performance. To this end it utilizes the Rust language, a hybrid imperative/OO/functional language with an emphasis on efficiency.

You can download and run the binaries, you can build the binaries from source, you can run parity in a docker container or you can run parity in the cloud e.g. Microsoft Azure.

The precondition is that you have docker installed; see chapters “Installation” and “Network” in [3].

Lately the parity docker image support has been improved greatly and different base images are available out of the box see [20].

But in case you want to build it on docker yourself, follow the instructions in this chapter. All details, i.e. the output and the issues encountered, can be found in appendix C. Listed here is the final resulting dockerfile:

# Get an image as basis for building the app

FROM ubuntu AS builder

# Set the working directory to /app

WORKDIR /app

# 1. Download and install all packages required to build parity

RUN apt-get update -y && apt-get install -y build-essential && apt-get install -y libudev-dev && apt-get install -y git && apt-get install -y curl && curl https://sh.rustup.rs -sSf > rustup.sh && chmod +x rustup.sh && ./rustup.sh -y && git clone https://github.com/paritytech/parity

# (2. Optionally download and install the test packages required to run the parity tests)

#RUN cd parity && git submodule init && git submodule update

# 3. Finally run the compilation and build the parity node

# There is an issue running “source $HOME/.cargo/env”, replacing with “. $HOME/.cargo/env”

RUN cd parity && . $HOME/.cargo/env && cargo build

# Run app.py when the container launches

#CMD ["python", "app.py"]

docker build -t becke-ch--parity--s0-v1:s0-0-v1-0 .

docker run --net docker--s0-0-v1-0 --ip 10.0.0.10 -it becke-ch--parity--s0-v1:s0-0-v1-0

Full Node: Running a full node with the standard configuration for the Ethereum Mainnet requires a lot of computer resources. The blockchain download and validation process is particularly heavy on CPU and disk IO. It is therefore recommended to run a full node on a computer with a multi-core CPU, 4GB RAM and an SSD drive with at least 60GB free space. The internet connection can also be a limiting factor. A decent DSL connection is required.

Light Node: Running a light node using the flag --light does not require downloading and validating the whole blockchain. A light node relies on full node peers to receive block headers and verify transactions. It is therefore far less resource demanding than a full node. For additional information see also chapter 1.1.2.1.

--warp: State snapshotting, or warp-sync, allows for an extremely fast “synchronization” that skips almost all of the block processing, simply injecting the appropriate data directly into the database. Parity 1.6 or 1.7, --warp does nothing as it is enabled by default.

parity --chain ropsten : Connect to the Ropsten testnet.

parity --chain kovan : Connect to the Kovan testnet.

parity --bootnodes enode://YOUR_BOOT_NODE_ID_HERE@127.0.0.1:30303 : You can override the normal boot nodes with --bootnodes, i.e., you might run a local bootnode and sync from that. For further information on bootnodes see chapter 1.1.2, and regarding configuration see chapters 3.3.4, 3.3.4.1 and 3.3.4.2.

parity --reserved-peers /path/to/reserved.txt : Instead of using a bootnode to discover and connect to peers, you can maintain a permanent connection to your own set of nodes by wiring them with the --reserved-peers feature. Simply place all node addresses you want to connect to (enode://..., one per line) into a text file, e.g., reserved.txt.

Using a list of reserved peers hinders the ability of a network to grow (and shrink) dynamically, because maintaining and distributing such a file between the nodes is painful and runs against a decentralized approach. There are better ways and technologies to restrict nodes or IP ranges.

parity --chain /path/to/chain-config.json --config /path/to/node-config.toml --base-path /path/to/base-data-storage/ --jsonrpc-interface [IP] --jsonrpc-apis [APIS]: Start parity on a private/consortium chain defined in the “--chain” configuration, with a customized node configuration according to “--config”, with a dedicated base data storage location “--base-path” and accessible via JSON-RPC over HTTP on a (fixed) IP address “--jsonrpc-interface [IP]”. For an example see the PoA chain in chapter 3.3.6.1.

--chain: Create a directory per chain, where the directory name contains the chain name as specified in the parameter “"name"” of the chain configuration file, see chapter 3.3.5.1: “--chain /data/becke-ch--parity-chain--sX-vY/chain-config.json”.

$ mkdir -p /data/becke-ch--parity-chain--s0-v1

$ touch /data/becke-ch--parity-chain--s0-v1/chain-config.json

--config & --base-path: Create a directory per node, where the directory name contains the node name or host name: “becke-ch--parity-node--sX-vY”, starting with the (authority) organization name e.g. “becke-ch”, where “X” is an integer differentiating the various nodes (their scope/purpose) and “Y” is an integer that is incremented in case a new major version of the node is required. Below this directory create two sub-directories: “configuration” and “data”. The “configuration” directory contains the node configuration file according to chapter 3.3.4 and the “data” directory stores the node base and chain data.

“--config /data/becke-ch--parity-node--sX-vY/configuration/node-config.toml”

“--base-path /data/becke-ch--parity-node--sX-vY/data/”

$ mkdir -p /data/becke-ch--parity-node--s0-v1/data

$ mkdir -p /data/becke-ch--parity-node--s0-v1/configuration

$ touch /data/becke-ch--parity-node--s0-v1/configuration/node-config.toml

--jsonrpc-interface [IP]: Varying see chapter 3.3.4 and 3.3.4.2.

--jsonrpc-apis [APIS]: Optional/exceptional see chapter 3.3.4, 3.3.4.1 and 3.3.4.2.

“Minimal”: Minimal according to my point of view in matters of clean separation and reasonable functionality with minimal security exposure:

$ parity --chain /data/becke-ch--parity-chain--s0-v1/chain-config.json --config /data/becke-ch--parity-node--s0-v1/configuration/node-config.toml --base-path /data/becke-ch--parity-node--s0-v1/data/ --jsonrpc-interface 10.0.0.10

“Extended”:

$ parity --chain /data/becke-ch--parity-chain--s0-v1/chain-config.json --config /data/becke-ch--parity-node--s0-v1/configuration/node-config.toml --base-path /data/becke-ch--parity-node--s0-v1/data/ --jsonrpc-interface 10.0.0.10 --jsonrpc-apis web3,eth,pubsub,net,shh,shh_pubsub

Follow the installation in chapter 3.3.1.1 and the setup in the chapter above.

Because docker in some environments (e.g. Azure Container Instance (ACI)) has issues mounting more than one directory, link the chain configuration file into the node configuration directory (Attention: symbolic links do not work in docker, use a hard link instead!):

$ ln /data/becke-ch--parity-chain--s0-v1/chain-config.json /data/becke-ch--parity-node--s0-v1/configuration/

Run docker, mount the node (and chain) directory and pass the chain-configuration-, node-configuration- and base-data-storage-path as startup parameters: “--chain”, “--config” and “--base-path”.

Do NOT map the parity ports using “-p”, otherwise you will get conflicts with several docker nodes running on your host! Instead, see chapter “Network” in [3], run the container on an existing bridge (and if visibility is required outside of the host, expose this bridge via a physical NIC externally).

Run the docker containers on fixed IP addresses to make sure that they will find each other even after a restart. It is mandatory, at least for the boot-nodes, to run on fixed IP addresses, otherwise the other nodes in the network cannot start up (in case the boot-node is hard-coded / configured)!

“Minimal”:

$ docker run -ti --net docker--s0-0-v1-0 --ip 10.0.0.10 -v /data/becke-ch--parity-node--s0-v1/:/data/becke-ch--parity-node--s0-v1/ parity/parity:v1.11.8 --chain /data/becke-ch--parity-node--s0-v1/configuration/chain-config.json --config /data/becke-ch--parity-node--s0-v1/configuration/node-config.toml --base-path /data/becke-ch--parity-node--s0-v1/data/ --jsonrpc-interface 10.0.0.10

“Extended”:

$ docker run -ti --net docker--s0-0-v1-0 --ip 10.0.0.10 -v /data/becke-ch--parity-node--s0-v1/:/data/becke-ch--parity-node--s0-v1/ parity/parity:v1.11.8 --chain /data/becke-ch--parity-node--s0-v1/configuration/chain-config.json --config /data/becke-ch--parity-node--s0-v1/configuration/node-config.toml --base-path /data/becke-ch--parity-node--s0-v1/data/ --jsonrpc-interface 10.0.0.10 --jsonrpc-apis web3,eth,pubsub,net,shh,shh_pubsub

Based on chapter 1.1.2.2, parity exposes the following JSON RPC APIs by default. Next to each of them I’ve listed the web3js (see chapter 3.7.7) package it maps to:

web3: Most functions correspond to functions with the same name in “web3.utils” package.

eth: Most functions correspond to functions with the same name in “web3.eth” package. The web3.eth package allows you to interact with an Ethereum blockchain and Ethereum smart contracts.

pubsub: Most functions correspond to functions with the same name in “web3.eth.subscribe” package. The web3.eth.subscribe function lets you subscribe to specific events in the blockchain.

net: Most functions correspond to functions with the same name in “web3.*.net” package. The web3.*.net package allows you to interact with the Ethereum nodes network properties.

parity: N/A web3js. These functions are parity specific and have no web3js counterpart package.

parity_pubsub: N/A web3js. These functions are parity specific and have no web3js counterpart package.

traces: N/A web3js. These functions have no web3js counterpart package. The trace module is for getting a deeper insight into transaction processing. It includes two sets of calls; the transaction trace filtering API and the ad-hoc tracing API.

shh: Most functions correspond to functions with the same name in “web3.shh” package. The web3.shh package allows you to interact with the whisper protocol for broadcasting.

rpc: N/A. No documentation available at all?!

And the following JSON RPC APIs are NOT exposed by default:

personal: Most functions correspond to functions with the same name in “web3.eth.personal” package. The web3.eth.personal package allows you to interact with the Ethereum node’s accounts. Many of these functions send sensitive information, like passwords. Never call these functions over an unsecured Websocket or HTTP provider, as your password will be sent in plain text!

parity_accounts: N/A web3js. These functions are parity specific and have no web3js counterpart package.

parity_set: N/A web3js. These functions are parity specific and have no web3js counterpart package.

signer: N/A. These functions have no web3js counterpart package.

secretstore: N/A. These functions have no web3js counterpart package. Is available in default Parity client. Is considered unsafe and must be enabled separately! Contains dangerous methods and must be enabled with caution!

Finally I suggest enabling the following minimal set of APIs (in the configuration file, see chapter below):

apis = ["web3", "eth", "pubsub", "net", "shh", "shh_pubsub"]

The following (proprietary and/or not documented) APIs I’ve removed from the minimal default: parity, parity_pubsub, traces and rpc!

As shown in chapter 3.3.2 parity can be started without any specific node configuration. In this case the default configuration is used which matches the configuration of the other nodes in the public blockchain.

Configuration can either be provided as command line arguments or in a configuration file. Should the command line arguments and the config file disagree about a setting, the CLI takes precedence. Therefore the config file should contain the default, minimal and most restrictive configuration settings relevant for all participating nodes and (temporary) exception and dynamic behaviors should be provided via command line!
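The precedence rule described above can be sketched as a simple merge. This is a hypothetical model for illustration, not parity's actual implementation: the function name effectiveConfig and the object layout (one object per configuration section) are assumptions.

```javascript
// Hypothetical sketch of the precedence rule: values from the command
// line override values from the config file, section by section.
function effectiveConfig(fileConfig, cliConfig) {
  const result = {};
  const sections = new Set([...Object.keys(fileConfig), ...Object.keys(cliConfig)]);
  for (const section of sections) {
    // Later spreads win, so CLI values replace file values of the same name.
    result[section] = { ...fileConfig[section], ...cliConfig[section] };
  }
  return result;
}

// Config file: restrictive defaults shared by all nodes.
const fromFile = {
  rpc: { apis: ['web3', 'eth', 'pubsub', 'net', 'shh', 'shh_pubsub'] },
  network: { bootnodes: ['enode://YOUR_BOOT_NODE_ID_HERE@10.0.0.10:30303'] },
};

// Command line: a node-specific value, e.g. --jsonrpc-interface 10.0.0.10
const fromCli = { rpc: { interface: '10.0.0.10' } };

const merged = effectiveConfig(fromFile, fromCli);
console.log(merged.rpc.interface);   // 10.0.0.10
console.log(merged.rpc.apis.length); // 6
```

Keeping the shared, restrictive defaults in the file and passing only node-specific or temporary values on the command line means a node falls back to the safe settings as soon as it is restarted without the extra arguments.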

The config file: “--config /data/becke-ch--parity-node--sX-vY/configuration/node-config.toml” should contain the following information:

apis = [...]: By default only the minimal set of APIs as listed in the previous chapter should be exposed. Attention: due to a security issue in the parity WebSocket implementation, the disabling of WebSocket APIs is not working as expected, see chapter 1.1.2.2. In other words you can configure it but it will get ignored under certain circumstances, and therefore the WebSocket interface should not be exposed at all, see next chapter below!

[rpc]

apis = ["web3", "eth", "pubsub", "net", "shh", "shh_pubsub"]

[websockets]

apis = ["web3", "eth", "pubsub", "net", "shh", "shh_pubsub"]

bootnodes = [“enode://YOUR_BOOT_NODE_ID_HERE@IP_ADDRESS:30303”]: The configuration file should contain the initial boot node(s) of the chain founder(s). For (temporary) exceptions see next chapter. For additional remarks regarding boot nodes see chapter 1.1.2.

Using a list of reserved peers instead hinders the ability of a network to grow (and shrink) dynamically, because maintaining and distributing such a file between the nodes is painful and runs against a decentralized approach. There are better ways and technologies to restrict nodes or IP ranges.

[network]

bootnodes = ["enode://2806efe7adb55d001d9ffabefab6a1711540672ae84308133940c366dc08aaf3080839e14da17e3d694156f54c4cf33397fe00ac6aa377454433dc81aeb906f3@10.0.0.10:30303"]

The resulting configuration looks as follows:

[network]

bootnodes = ["enode://2806efe7adb55d001d9ffabefab6a1711540672ae84308133940c366dc08aaf3080839e14da17e3d694156f54c4cf33397fe00ac6aa377454433dc81aeb906f3@10.0.0.10:30303"]

[rpc]

apis = ["web3", "eth", "pubsub", "net", "shh", "shh_pubsub"]

[websockets]

apis = ["web3", "eth", "pubsub", "net", "shh", "shh_pubsub"]

--bootnodes enode://YOUR_BOOT_NODE_ID_HERE@IP_ADDRESS:30303: In case a bootnode changes (dynamic IP address or downtime) this information should be provided as startup parameter overriding the default configuration in the config file see previous chapter. For additional remarks regarding boot nodes see chapter 1.1.2.

--bootnodes enode://2806efe7adb55d001d9ffabefab6a1711540672ae84308133940c366dc08aaf3080839e14da17e3d694156f54c4cf33397fe00ac6aa377454433dc81aeb906f3@10.0.0.10:30303

--jsonrpc-interface=[IP]: To access the node via HTTP from externally (or from the host where the docker container node is running), the IP address on which the interface is listening, or to which it is assigned, should be allowed. Because the IP address of the node or interface might change in case of dynamic IPs, this information should be provided as a CLI parameter (in the sample below replace “10.0.0.10” with the IP address where your node is running or the interface is listening!).

--ws-interface=[IP]: Because there exists a security issue with the parity WebSocket implementation and the disabling of exposed APIs, see previous chapter above and chapter 1.1.2.2, the IP address on which the WebSocket interface is listening, or to which it is assigned, should NOT be allowed! Instead only the “--jsonrpc-interface=[IP]” should be allowed! For HTTP and WebSocket the default interface is “local”.

--jsonrpc-interface 10.0.0.10

--jsonrpc-apis [APIS]: In exceptional cases when additional administration APIs (for a list see chapter 3.3.3) are required, they can temporarily (for a short time) be activated via command line and they will then override the configuration in the config file, see previous chapter.

--ws-apis [APIS]: As already mentioned above, the disabling of WebSocket APIs is not working as expected and therefore this parameter should not be used (currently)!

--jsonrpc-apis web3,eth,pubsub,net,shh,shh_pubsub

By default, when simply running parity, Parity Ethereum will connect to the official public Ethereum network.

In order to run a chain different to the official public Ethereum one, Parity has to run with the --chain option e.g. “parity --chain ropsten”:

mainnet (default) main Ethereum network

kovan or testnet the fast Ethereum test network

ropsten the old Ethereum test network

classic Ethereum Classic network

classic-testnet original Morden testnet and current Ethereum Classic testnet

expanse Expanse network

dev a private development chain to be used locally; submitted transactions are inserted into blocks instantly without the need to mine

musicoin Musicoin network

ellaism Ellaism network

For more information on networks see chapter 3.1.

Parity supports private chains and private network configurations via a chain specification file provided with --chain. In addition to the usual Proof of Work chains, Parity also includes Proof of Authority chains, which do not require mining:

JSON chain spec format:

{

"name": "becke-ch--parity-chain--sX-vY",

"engine": {

"ENGINE_NAME": {

"params": {

ENGINE_PARAMETERS

}

}

},

"genesis": {

"seal": {

ENGINE_SPECIFIC_GENESIS_SEAL

},

"difficulty": "0x20000",

"gasLimit": "0x2fefd8"

},

"params": {

"networkID" : "0xYOUR_NETWORK_ID",

"maximumExtraDataSize": "0x20",

"minGasLimit": "0x1388"

},

"accounts": {

GENESIS_ACCOUNTS

}

}

"name" field contains any name used to identify the chain. It is used as a folder name for database files, so avoid spaces in the name (if it contains spaces, set the parameter "dataDir" to a value without spaces). Example: “becke-ch--parity-chain--sX-vY”, starting with the (authority) organization name, where “X” is an integer differentiating the various private/consortium chains (their scope/purpose) and “Y” is an integer that is incremented whenever a new major version of the chain is required.

"engine" field describes the consensus engine used for a particular chain. See: [21] – for implementation of PoA see chapter 3.3.6.1.

"genesis" contains the genesis block (first block in the chain) header information. Every time we launch Ethereum, we actually recreate this genesis block from scratch. Syncing the blockchain with peers only begins at block 1.

"seal" is consensus engine specific and is further described in Consensus Engines. See: [21] – for implementation of PoA see chapter 3.3.6.1.

"difficulty" is the difficulty of the genesis block. This parameter is required for any type of chain but can be of arbitrary value if a PoA engine is used. Set this value low so you don’t have to wait too long for mining blocks.

"gasLimit" is the gas limit of the genesis block. It affects the initial gas limit adjustment. Set this value high to avoid being limited when testing.

"params" contains general chain parameters:

"networkID": DevP2P supports multiple networks, and the ID uniquely identifies each one. It is used to connect to the correct peers and to prevent transaction replay across chains. Make sure that the ID does not collide with the ID of an existing network, see chapter 3.3.5.

"maximumExtraDataSize": determines how much extra data the block issuer can place in the block header.

"minGasLimit": gas limit can adjust across blocks, this parameter determines the absolute minimum it can reach.

Optional:

"gasLimitBoundDivisor": U256 - How much the block gas limit can change between blocks. Miners can vote to bring the block gas limit up or down (via the flag --gas-floor-target); the new gas limit is calculated according to the formula: current_gas_limit*(1 ± 1/gasLimitBoundDivisor).

"validateChainIdTransition": Optional, will be included for block 0 by default - Block before which any chain_id in the signature of a replay-protected transaction is accepted. After this transition block, the transactions’ chain_id must match with the spec chain_id to be considered valid.

"eip155Transition": See EIP [32].

"eip140Transition": See EIP [32].

"eip211Transition": See EIP [32].

"eip214Transition": See EIP [32].

"eip658Transition": See EIP [32].

"accounts" contains optional contents of the genesis block, such as simple accounts with balances or contracts. Parity does not include the standard Ethereum builtin contracts by default. These are necessary when writing new contracts in Solidity since compiled Solidity often refers to them. To make the chain behave like the public Ethereum chain the 4 contracts need to be included in the spec file, as shown in the example below:

"accounts": {

"0x0000000000000000000000000000000000000001": { "balance": "1", "builtin": { "name": "ecrecover", "pricing": { "linear": { "base": 3000, "word": 0 } } } },

"0x0000000000000000000000000000000000000002": { "balance": "1", "builtin": { "name": "sha256", "pricing": { "linear": { "base": 60, "word": 12 } } } },

"0x0000000000000000000000000000000000000003": { "balance": "1", "builtin": { "name": "ripemd160", "pricing": { "linear": { "base": 600, "word": 120 } } } },

"0x0000000000000000000000000000000000000004": { "balance": "1", "builtin": { "name": "identity", "pricing": { "linear": { "base": 15, "word": 3 } } } }

}

Other types of accounts that can be specified:

simple accounts with some balance "0x...": { "balance": "100000000000" }

full account state "0x...": { "balance": "100000000000", "nonce": "0", "code": "0x...", "storage": { "0": "0x...", ... } }

contract constructor, similar to sending a transaction with bytecode "0x...": { "balance": "100000000000", "constructor": "0x..." }. The constructor bytecode is executed when the genesis is created and the code returned by the “constructor” is stored in the genesis state.
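The "accounts" section can also be generated instead of being written by hand. The sketch below produces the four builtin contracts with the pricing values from the example above, plus simple accounts with a balance. It assumes that balances are given in wei (1 ether = 10^18 wei); the function name genesis_accounts and the parameter ether_each are hypothetical:

```python
import json
from typing import Dict, Iterable

WEI_PER_ETHER = 10 ** 18

# Builtin contracts (address number -> name, base price, price per word),
# values taken from the example in this chapter.
BUILTINS = {
    1: ("ecrecover", 3000, 0),
    2: ("sha256", 60, 12),
    3: ("ripemd160", 600, 120),
    4: ("identity", 15, 3),
}


def genesis_accounts(user_accounts: Iterable[str], ether_each: int) -> Dict[str, dict]:
    """Build the "accounts" section: builtin contracts plus funded accounts."""
    accounts = {}
    for number, (name, base, word) in BUILTINS.items():
        address = "0x" + format(number, "040x")  # 20-byte address, zero padded
        accounts[address] = {
            "balance": "1",
            "builtin": {"name": name,
                        "pricing": {"linear": {"base": base, "word": word}}},
        }
    for address in user_accounts:
        # Balances are written as decimal strings in wei.
        accounts[address] = {"balance": str(ether_each * WEI_PER_ETHER)}
    return accounts


if __name__ == "__main__":
    section = genesis_accounts(["0x26D9E695Bd95fD6cd9e2C08Fc3450FCD6958fC1E"], 10000)
    print(json.dumps({"accounts": section}, indent=2))
```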

Proof of Authority does not depend on nodes solving arbitrarily difficult mathematical problems, but instead uses a set of “authorities” - nodes that are explicitly allowed to create new blocks and secure the blockchain. The block has to be signed off by the majority of authorities, in which case it becomes a part of the permanent record. This makes it easier to maintain a private chain and keep the block issuers accountable.

In a consortium setting, a PoA network has no disadvantages compared to PoW. It is more secure (since an attacker with an unwanted connection or a hacked authority cannot overwhelm the network and potentially revert all transactions), less computationally intensive (mining with a difficulty that provides security requires lots of computation), more performant (Aura consensus provides lower transaction acceptance latency) and more predictable (blocks are issued at steady time intervals). PoA deployments are used by enterprises and by the public (e.g. the popular Kovan test network).

The two available algorithms are Aura and Tendermint. Because Tendermint is still experimental, we will focus on Aura (authorityRound) in the following subchapters.
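To make the round-robin idea behind Aura more tangible, the sketch below models how block issuance rotates among the authorities. It assumes the scheme described in the Parity Aura documentation: time is divided into steps of "stepDuration" seconds, and the authority at index (step mod number of authorities) is expected to issue the block of that step. The function names are hypothetical, and this is a simplified model rather than Parity's implementation:

```python
from typing import Sequence


def aura_step(unix_time: int, step_duration: int) -> int:
    """Number of the step that contains the given UNIX timestamp."""
    return unix_time // step_duration


def expected_issuer(validators: Sequence[str], step: int) -> str:
    """Authority expected to issue the block in the given step (round robin)."""
    return validators[step % len(validators)]


if __name__ == "__main__":
    import time

    # Hypothetical authority names; in a chain spec these are account addresses.
    authorities = ["authority-a", "authority-b", "authority-c"]
    step = aura_step(int(time.time()), 5)
    print(step, expected_issuer(authorities, step))
```

The model also illustrates why synchronized system clocks matter: nodes that disagree about the current time also disagree about the current step and therefore about whose turn it is.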

Pick real world entities that should control the network, so called authorities, e.g. different organizations that want to collaborate without an intermediary. Optionally establish the authority as a new organization and give it a DNS name, e.g. “becke.ch” (or “becke-ch”, depending on the context).

Nominate a promoter or administrator of the authority round / chain, responsible for specifying and distributing the configurations and for running an (initial) boot node the others can connect to.

Each authority should create two accounts: an authority account and a user account. The authority account is used by the node itself to issue blocks, and to issue blocks the node requires the password (file) for this authority account. Because this is a security issue (from my point of view), and for separation of concerns, we use a separate user account that holds the ether required to send transactions. The accounts can be created by using a wallet, by running the code shown in chapter 3.7.3, or by reusing an existing account (public/private key pair).

Each authority sends its authority account (public key) and user account (public key) to the authority round administrator.

The administrator creates the configuration files:

Prepares the network configuration as shown below and stores it in a file:

$ vi /data/becke-ch--parity-chain--s0-v1/chain-config.json

{

"name": "becke-ch--parity-chain--s0-v1",

"engine": {

"authorityRound": {

"params": {

"stepDuration": "5",

"validators" : {

"list": ["0xDfBad97d7AD160804762c36C255082a705D985E8","0x1C8592BFB4f2A842fC2eFBEB54463638739c2B93","0x169b1feCEb85044b36d6B7A3f2076Af6b372843A"]

}

}

}

},

"genesis": {

"seal": {

"authorityRound": {

"step": "0x0",

"signature": "0x0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000"

}

},

"difficulty": "0x20000",

"gasLimit": "0x2fefd8"

},

"params": {

"networkID" : "0x777",

"maximumExtraDataSize": "0x20",

"minGasLimit": "0x1388",

"gasLimitBoundDivisor": "0x400",

"eip155Transition": 0,

"validateChainIdTransition": 0,

"eip140Transition": 0,

"eip211Transition": 0,

"eip214Transition": 0,

"eip658Transition": 0

},

"accounts": {

"0x0000000000000000000000000000000000000001": { "balance": "1", "builtin": { "name": "ecrecover", "pricing": { "linear": { "base": 3000, "word": 0 } } } },

"0x0000000000000000000000000000000000000002": { "balance": "1", "builtin": { "name": "sha256", "pricing": { "linear": { "base": 60, "word": 12 } } } },

"0x0000000000000000000000000000000000000003": { "balance": "1", "builtin": { "name": "ripemd160", "pricing": { "linear": { "base": 600, "word": 120 } } } },

"0x0000000000000000000000000000000000000004": { "balance": "1", "builtin": { "name": "identity", "pricing": { "linear": { "base": 15, "word": 3 } } } },

"0x26D9E695Bd95fD6cd9e2C08Fc3450FCD6958fC1E": { "balance": "10000000000000000000000" },

"0xDdf06643ec9970568424c690c27462e090B9bd66": { "balance": "10000000000000000000000" },

"0x9929ff47aDFb2D3D2Ab5D65E156d9a9EA46B5435": { "balance": "10000000000000000000000" }

}

}
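The sample configuration above sets "gasLimitBoundDivisor" to 0x400. To get a feeling for what this means, the sketch below applies the formula current_gas_limit*(1 ± 1/gasLimitBoundDivisor) from the previous chapter and clamps the result at "minGasLimit". It assumes integer division (gas limits are whole numbers), and the function name is hypothetical:

```python
from typing import Tuple


def gas_limit_bounds(current_gas_limit: int,
                     gas_limit_bound_divisor: int,
                     min_gas_limit: int) -> Tuple[int, int]:
    """Lowest and highest gas limit the next block may have."""
    # Assumption: the maximum change per block is rounded down to an integer.
    delta = current_gas_limit // gas_limit_bound_divisor
    lower = max(current_gas_limit - delta, min_gas_limit)
    upper = current_gas_limit + delta
    return lower, upper


if __name__ == "__main__":
    # Values from the sample configuration: gasLimit, gasLimitBoundDivisor, minGasLimit.
    print(gas_limit_bounds(int("0x2fefd8", 16), int("0x400", 16), int("0x1388", 16)))
```

Under these assumptions the block following the genesis block (gas limit 0x2fefd8 = 3141592) may have a gas limit between 3138525 and 3144659, i.e. the limit can move by roughly 0.1% per block.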

"name" see previous chapter 3.3.5.1 e.g. “becke-ch--parity-chain--s0-v1”

"stepDuration" determines the lowest interval between blocks in seconds, too low might cause reorgs if the system clocks are not synchronized, too high leads to slow block issuance.

"validators": {"list": ["0xDfBad97d7AD160804762c36C255082a705D985E8","0x1C8592BFB4f2A842fC2eFBEB54463638739c2B93","0x169b1feCEb85044b36d6B7A3f2076Af6b372843A"]}: is the list of addresses of the authorities which will be allowed to issue blocks. Initially this list contains the founders of this authority round / chain. These accounts were sent to the administrator in the previous step. This list will grow as more authorities join the round or organization. For additional information see chapter 3.3.6.2.

The account “0xDfBad97d7AD160804762c36C255082a705D985E8” belongs to the administrator.

Instead of changing the “list” every time a new authority is added, I suggest additionally specifying:

"safeContract" Address of a non-reporting contract that indicates the list of authorities – see chapter 3.3.6.2 and 3.3.6.2.1. OR

"contract" Address of a reporting contract that indicates the list of authorities, enables reporting their misbehavior and decides on consequences, e.g. blocking them temporarily or banning them entirely – see chapter 3.3.6.2 and 3.6.1.5. OR

"multi": Combines these approaches: “list”, “safeContract” and “contract”. In the example below we start with a hardcoded “list” of authorities at block “0”, switch over to a “safeContract” based authority list at block “10” and finally switch over to a “contract” based authority list at block “20”:

"validators" : {

"multi": {

"0": { "list": ["0xc6d9d2cd449a754c494264e1809c50e34d64562b"] },

"10": { "safeContract": "0xd6d9d2cd449a754c494264e1809c50e34d64562b" },

"20": { "contract": "0xc6d9d2cd449a754c494264e1809c50e34d64562b" }

}

}
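One way to read a “multi” specification like the one above is that, for a given block number, the entry with the highest transition block that does not exceed this block number applies. The sketch below implements this reading. The function name is hypothetical, and the rule is a simplification: it ignores any additional conditions Parity may apply before a contract based set becomes active.

```python
from typing import Any, Dict


def active_validator_source(multi: Dict[str, Any], block_number: int) -> Any:
    """Return the validator set definition that applies at block_number."""
    # Keys in the spec are block numbers written as strings.
    by_height = {int(height): source for height, source in multi.items()}
    applicable = [height for height in by_height if height <= block_number]
    if not applicable:
        raise ValueError("no validator set defined for block %d" % block_number)
    return by_height[max(applicable)]


if __name__ == "__main__":
    multi = {
        "0": {"list": ["0xc6d9d2cd449a754c494264e1809c50e34d64562b"]},
        "10": {"safeContract": "0xd6d9d2cd449a754c494264e1809c50e34d64562b"},
        "20": {"contract": "0xc6d9d2cd449a754c494264e1809c50e34d64562b"},
    }
    for block in (0, 9, 10, 25):
        print(block, list(active_validator_source(multi, block)))
```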

"accounts"

contains the standard Ethereum builtin contracts (ecrecover, sha256, etc.), which are required to use the Solidity contract language. Additionally (for reasons of security and separation of concerns), the accounts section contains the user accounts of the individual authorities that were sent to the administrator in the previous step (3.). These are also the accounts to which the administrator initially assigns ether as part of the chain configuration.

The user account “0x26D9E695Bd95fD6cd9e2C08Fc3450FCD6958fC1E” belongs to the administrator.
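Before the administrator distributes the chain configuration, a few of the rules described in this chapter can be checked automatically. The sketch below is an illustrative helper and not a complete validator: the function name check_chain_spec is hypothetical, it assumes the "networkID" is written as a hexadecimal string and a hardcoded authority “list”, and the set of public network IDs is a small, incomplete sample:

```python
import re
from typing import List

ADDRESS_RE = re.compile(r"^0x[0-9a-fA-F]{40}$")
# Assumption: incomplete sample of public network IDs
# (mainnet, Morden, Ropsten, Rinkeby, Kovan).
PUBLIC_NETWORK_IDS = {1, 2, 3, 4, 42}


def check_chain_spec(spec: dict) -> List[str]:
    """Return a list of problems found in the spec; empty if none were found."""
    problems = []
    name = spec.get("name", "")
    if not name or " " in name:
        problems.append("name is missing or contains spaces")

    engine = spec.get("engine", {}).get("authorityRound", {})
    validators = engine.get("params", {}).get("validators", {}).get("list", [])
    if not validators:
        problems.append("no validator list found")
    for address in validators:
        if not ADDRESS_RE.match(address):
            problems.append("malformed validator address: " + address)

    accounts = spec.get("accounts", {})
    for number in range(1, 5):
        if "0x" + format(number, "040x") not in accounts:
            problems.append("builtin contract %d is missing" % number)

    # Separation of concerns: authority accounts should not hold the ether.
    funded = {a.lower() for a, v in accounts.items() if "builtin" not in v}
    if funded & {v.lower() for v in validators}:
        problems.append("an authority account is also a funded user account")

    network_id = int(spec.get("params", {}).get("networkID", "0x0"), 16)
    if network_id in PUBLIC_NETWORK_IDS:
        problems.append("networkID collides with a public network")
    return problems
```

The configuration file can be loaded with json.load and passed to the function; an empty result only means that none of these particular checks failed.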

The administrator prepares the node configuration as shown in chapter 3.3.4 and 3.3.4.1 and stores it in a file. The bootnode line is commented out because (of course) we don’t know its value until the node has been started for the first time: